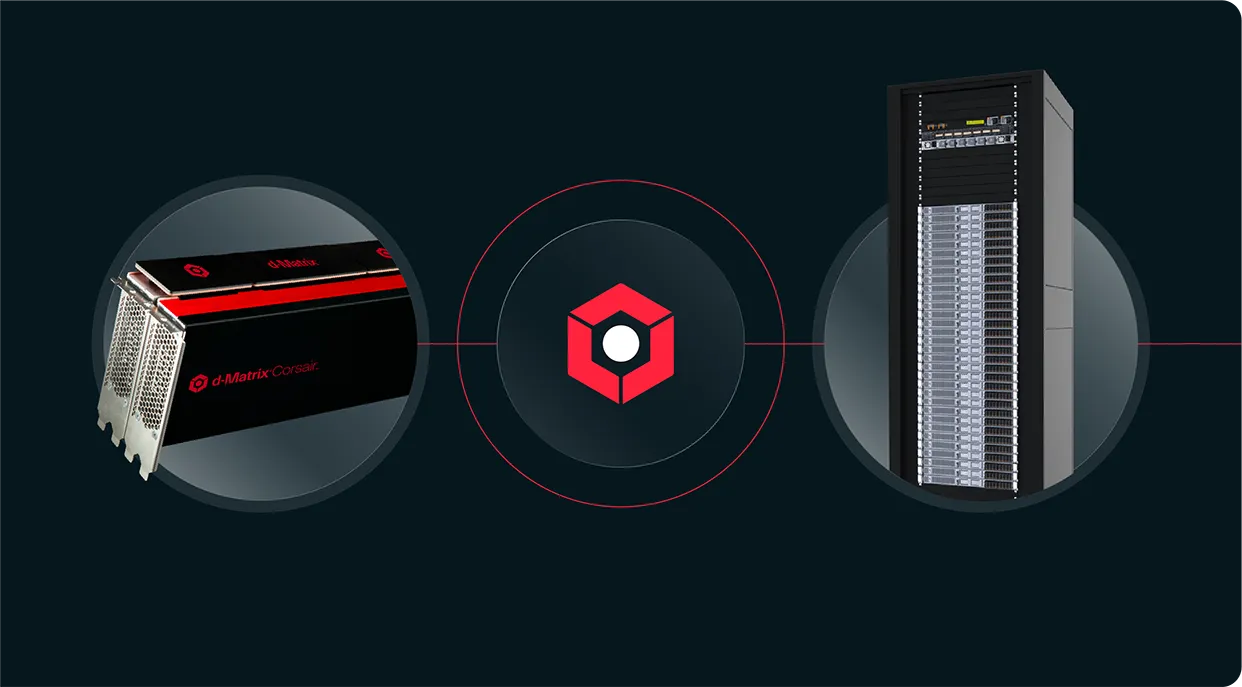

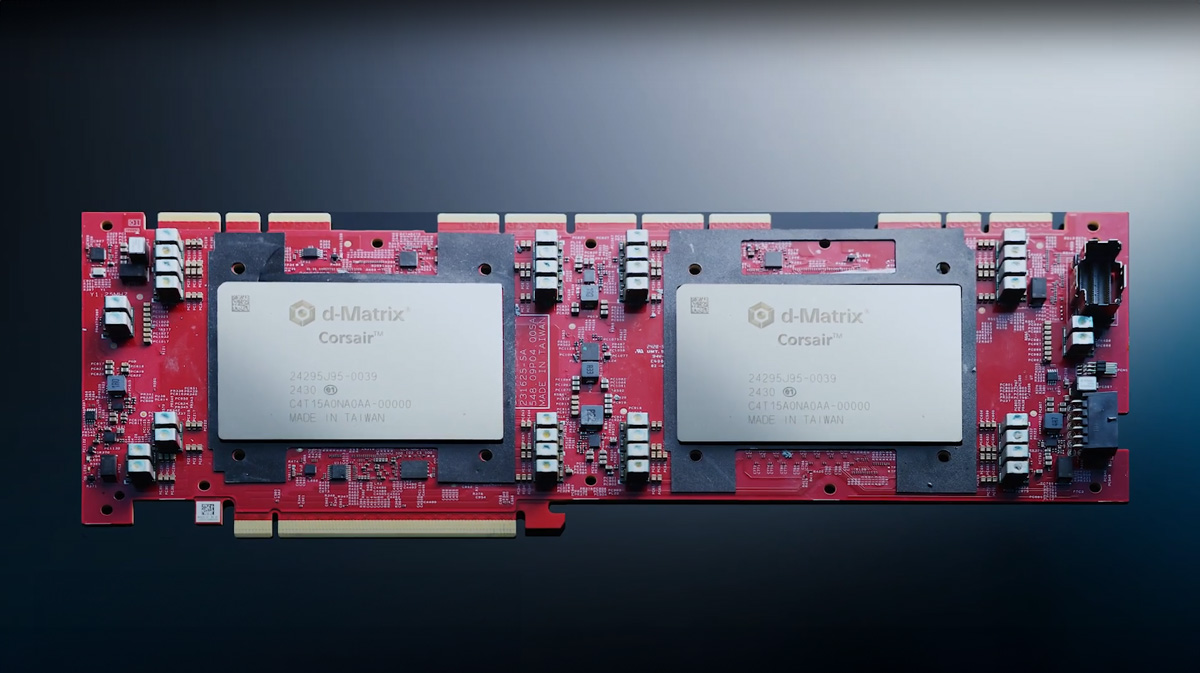

Meet Corsair™,

the world’s most efficient AI inference platform for datacenters

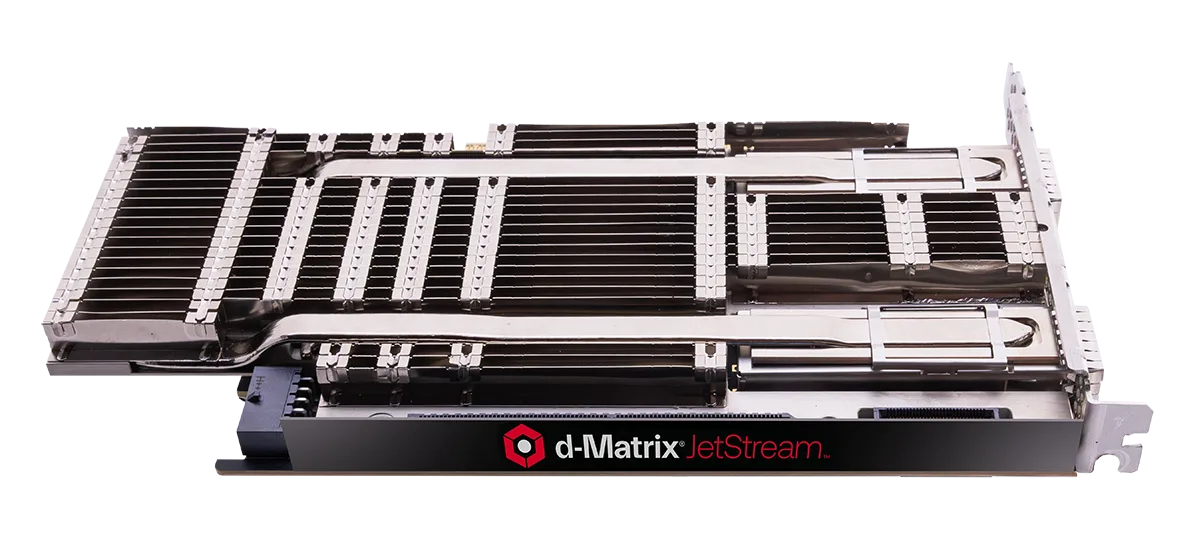

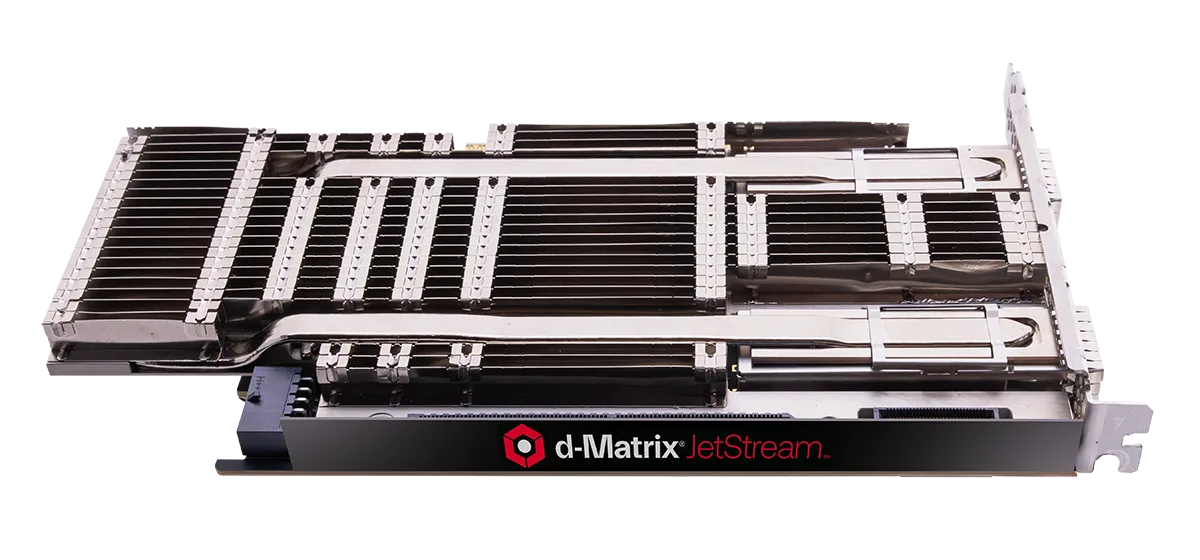

Scale with JetStream™, purpose-built I/O accelerator for AI inference

A next-generation blazing fast accelerator-to-accelerator communications platform that scales up to millions of requests.

Announcing SquadRack™, industry’s first rack-scale solution, purpose-built for AI inference, using a disaggregated standards-based approach. Built with industry-leading AI infrastructure providers.

Redefining Performance and Efficiency for AI Inference at Scale

Blazing Fast

interactive-speed

Commercially Viable

cost-performance

Sustainable

energy-efficiency

Built without compromise

Don’t limit what AI can truly achieve and who can benefit from it. We’ve built Corsair from the ground up, with a first-principle approach . Delivering Gen AI without compromising on speed, efficiency, sustainability or usability.

Performant AI

d-Matrix delivers ultra-low latency high batched throughput, making Enterprise workloads for GenAI efficient

Sustainable AI

AI is on an unsustainable trajectory with increasing energy consumption and compute costs. d-Matrix let’s you to do more with less.

Scalable AI

Our purpose-built solution scales across models and is enabling enterprises and datacenters of all sizes to adopt GenAI quickly and easily.