‘AI inference’ is trending everywhere, from keynote speeches and quarterly earnings reports to the news. You have probably heard phrases like “inference-time compute”, “reasoning”, and “AI deployment”; all of them relate to this topic.

In this blog series, we will demystify AI inference – what it is, why it is important, what the challenges are, and how to tackle them. Let’s start at the beginning.

What is AI Inference?

Artificial Intelligence (AI) enables machines to perform tasks that typically require humans, such as understanding language, recognizing images, solving problems, and making decisions. AI inference, simply put, is the process of running an AI model to perform a specific task. For example, when you ask ChatGPT a question, it runs a Large Language Model (LLM) on your query behind the scenes to generate an answer. In other words, it performs AI inference.

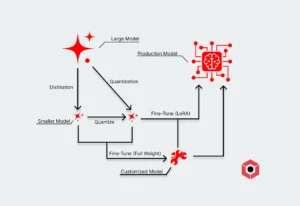

Before a model can be used for inference, it must first be trained and, often, fine-tuned. Let’s step back and look at the various stages:

- Training: the process of teaching an AI model using very large input data sets (think web-scale). The model learns to generalize patterns and rules from the data. The resulting model is sometimes referred to as a base model.

- Fine-tuning or post-training: the process of taking a base model and using smaller, specialized data sets to adapt it to real-world use cases.

- Inference: the process of using the trained model to generate predictions (outputs) on new data. In real-world deployment, it is the inference step that produces responses to user requests.
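To make the split between training and inference concrete, here is a toy sketch. It assumes a hypothetical two-parameter linear model (nothing like the scale or architecture of an LLM) and omits fine-tuning for brevity: training learns the parameters from example data once, and inference then reuses those frozen parameters on inputs the model has never seen.

```python
# Toy illustration only: a hypothetical one-feature linear model, not an LLM.
# "Training" fits parameters from data; "inference" reuses the frozen
# parameters to predict outputs for new inputs.

def train(xs, ys):
    """Fit y = w * x + b by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b  # the learned "model" is just these two parameters


def infer(model, x):
    """Run the trained model on a new input; no learning happens here."""
    w, b = model
    return w * x + b


# Training data that follows y = 2x + 1 exactly.
model = train([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(infer(model, 10.0))  # prints 21.0 for an input never seen in training
```

Training is done once (and is expensive at web scale), while `infer` runs for every single request, which is why inference cost grows with usage.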

How does AI inference show up in everyday life?

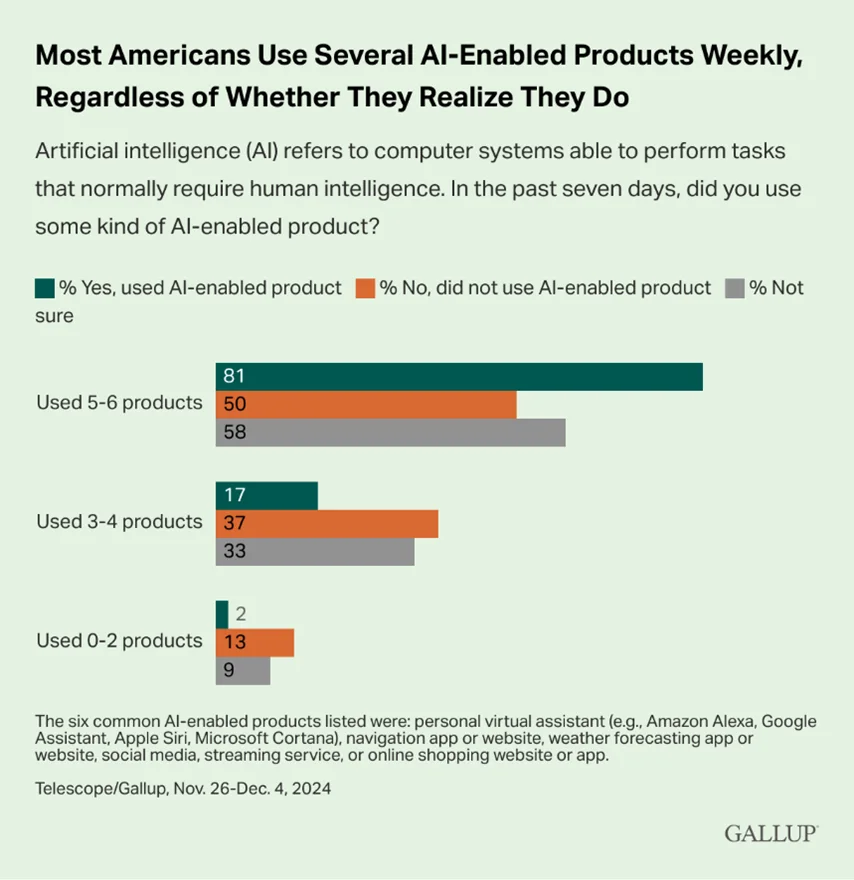

You encounter AI inference daily, often without realizing it. A recent Gallup survey found that nearly all Americans use AI-based products, but nearly two-thirds (64%) do not realize it (source). Everyday products run AI models under the hood to accomplish various tasks. Examples include:

- Unlocking your smartphone with facial recognition

- Google showing personalized search results

- Netflix recommending movies you might enjoy

- Gmail filtering spam email

- Smart assistants responding to voice commands

- ChatGPT responding to user queries

What is unique about Generative AI Inference?

The computational resources required to perform AI inference vary based on the models and use cases. Conventional use cases like image perception, voice, or recommendations use smaller AI models and require fewer resources, often running on a single GPU or accelerator card.

However, generative AI models are orders of magnitude bigger, scaling to hundreds of billions or even trillions of parameters, and are far more resource-intensive. They need multiple cards or servers to perform inference, consuming significantly more compute and energy. For the end user, this translates into higher costs and slower response times.
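A back-of-the-envelope calculation shows why model size forces inference onto multiple cards. The sketch below assumes 2 bytes per parameter (16-bit weights) and a hypothetical accelerator with 80 GB of memory; the parameter counts are illustrative, not specific products. It counts only the memory for the weights, so it is a lower bound: real deployments also need room for activations, caches, and runtime overhead.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="fp16"):
    """Memory needed just to hold the model weights, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_accelerators(num_params, card_memory_gb=80, dtype="fp16"):
    """Lower bound on cards needed to fit the weights alone.

    Assumes a hypothetical 80 GB card by default and ignores activations,
    caches, and framework overhead, so real deployments need more.
    """
    return math.ceil(weight_memory_gb(num_params, dtype) / card_memory_gb)


# Illustrative sizes: a small conventional model vs. large generative models.
for label, params in [("100M", 100e6), ("70B", 70e9), ("1T", 1e12)]:
    gb = weight_memory_gb(params)
    cards = min_accelerators(params)
    print(f"{label} params: {gb:,.1f} GB of weights -> at least {cards} card(s)")
```

Under these assumptions, a 100-million-parameter model fits comfortably on one card, while a trillion-parameter model needs about 2,000 GB for its weights alone, or at least 25 such cards.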

Source: Charting Disruption’25 series

Why has AI Inference become important now?

The latest breakthroughs in generative AI have led to an explosion in AI usage: a perfect storm of more applications, more users, and more frequent usage per user. For example, ChatGPT is estimated to have over 500 million weekly active users and to process over a billion queries every day. This has driven a dramatic increase in GenAI inference volume. Combined with the large computational requirements of each request, the result is enormous pressure on data center resources. We need more compute hardware, more energy, and more physical space to keep up with demand. This poses significant challenges for AI infrastructure on all fronts: performance, cost, sustainability, and scalability.

In the upcoming posts in this series, we will dive deeper into these implications for AI infrastructure and how it is evolving to keep up. AI data centers will need to innovate across the board to deliver a reliable, high-performance user experience while keeping costs in check. We will break down what this means for the compute platform and where innovation is needed across the inference software stack, as well as in the hardware accelerators that power these workloads. Stay tuned.