Right after the launch of ChatGPT in November 2022, industry observers predicted the rise of a brand-new class of engineers: ones specializing in prompt engineering, crafting the perfect prompts for any AI-powered use case.

That prediction has largely been thrown out the window in favor of a new mental model: context engineering. Instead of crafting the perfect prompt, context engineering focuses on optimizing both the prompt and everything around it. That includes the data going into prompts, where that data comes from, configurations for LLM workflows, and a variety of other functions.

New models that one-up the prior ones come out every six months or so, whether open-weight models like DeepSeek and Kimi K2 or frontier models like Claude and ChatGPT. Through all of that churn, the strategic tools around those models remain critical. AI models, regardless of how powerful they are, are only as good as the pipelines and processes built around them.

Context engineering spans a whole suite of software and hardware functions that go well beyond the old-school method of pulling an open model off Hugging Face and running it on some rented H100s. Instead, we’re talking about a process with upwards of a dozen steps to truly optimize an AI workflow and get the most out of an AI model.

The best applications require best-in-class context engineering tools that surround these models. And unlike swapping AI models in and out as soon as the next best one comes out, context engineering tools require significantly more work to get right.

The models may change overnight, but a well-structured data pipeline, and the processing that sits underneath those powerful models, will always be critical.

What is context engineering?

Context engineering refers to the class of functions that surround a prompt and response for an AI model, from managing LLM workflows to optimizing RAG-based architectures to get the most bang for your million tokens. The term has been around for only a few months but has gained traction among top AI researchers like Andrej Karpathy, who consider it a natural next step after prompt engineering.

Context engineering today might include:

- Optimizing token usage to hit a sweet spot between total cost and response quality, so that certain types of responses can scale up.

- Managing memory across sessions, as ChatGPT and Claude do, so that a persistent context base is available to personalize a response.

- Embedding and retrieving the most accurate and important information in a RAG architecture.

- Enabling agentic workflows by breaking complicated steps down into simpler ones that task-specific models can handle.

- Evaluating the success of models to create a continuous feedback loop that improves the user experience, such as by updating memory or shifting the inputs of a model.
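To make the first two items concrete, here is a minimal Python sketch of token-budgeted context assembly: persistent memory and the new question are always kept, and older conversation turns are dropped first when the budget runs out. It assumes a crude whitespace-based token count (a real system would use the target model’s own tokenizer), and `build_context`, `Turn`, and the `memory:`/`user:` line format are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


def count_tokens(text: str) -> int:
    """Crude estimate: one token per whitespace-separated word (an assumption;
    production code would use the target model's tokenizer)."""
    return len(text.split())


@dataclass
class Turn:
    role: str
    text: str


def build_context(memory: list[str], history: list[Turn], question: str, budget: int) -> list[str]:
    """Return prompt lines that fit within `budget` tokens.

    Persistent memory and the new question are always kept; older conversation
    turns are dropped first when the budget runs out.
    """
    head = [f"memory: {m}" for m in memory]
    tail = f"user: {question}"
    used = sum(count_tokens(line) for line in head) + count_tokens(tail)
    if used > budget:
        raise ValueError("memory plus question already exceed the token budget")

    kept: list[str] = []
    for turn in reversed(history):  # newest turn first
        line = f"{turn.role}: {turn.text}"
        cost = count_tokens(line)
        if used + cost > budget:
            break  # this turn and everything older than it are dropped
        kept.append(line)
        used += cost

    return head + kept[::-1] + [tail]  # restore chronological order
```

Dropping the oldest turns first is only one possible policy; summarizing older turns into memory instead of discarding them is a common alternative that trades extra model calls for better recall.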

This shift is possible thanks to much more advanced systems and models coming out of organizations and research labs. Previously, only trivial RAG was available to augment a context window (at the time also referred to as in-context learning). Newer models offer advanced reasoning capabilities (including reasoning traces in the case of DeepSeek), and smaller, performant models allow for complex chains and agentic networks. Orchestration tools now also exist to augment these workflows.

That has vastly increased the potential of AI workflows, but it comes at the cost of complexity. Sticking with the elementary retrieval techniques available circa 2024 would send your costs through the roof. Modern advanced workflows require not just intelligent context but management of the whole process to optimize cost and performance, in terms of both throughput and response quality.

For example, when RAG gained popularity last year, you would use a simple embedding and retrieval setup with a vector database like Pinecone and models from Cohere. There is now a much wider selection of vector databases and embedding and retrieval models (including newer re-ranking models), along with newer techniques like implementing graph search on top of standard RAG. Workflows also let developers move away from firing off one colossal prompt and hoping for the best, and instead break complex problems into smaller, more targeted tasks. That offers much more flexibility, such as stopping at a specific point or routing through multiple scenarios across a variety of models.
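A decomposed workflow of this kind can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: each step is a plain function standing in for a model call, a keyword rule stands in for a small classifier model, and the FAQ contents, step names, and `done` flag are all hypothetical. It shows the two behaviors described above: stopping early at a specific point, and routing to different steps depending on earlier results.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class State:
    query: str
    notes: list[str] = field(default_factory=list)
    answer: Optional[str] = None
    done: bool = False  # any step can set this to stop the workflow early


Step = Callable[[State], State]


def classify(state: State) -> State:
    """Cheap first step; a keyword rule stands in for a small classifier model."""
    kind = "billing" if "refund" in state.query.lower() else "general"
    state.notes.append(f"kind={kind}")
    return state


def answer_from_faq(state: State) -> State:
    """Targeted step: answer from a (hypothetical) FAQ and stop if an entry matches."""
    faq = {"opening hours": "We are open 9am to 5pm, Monday to Friday."}
    for key, reply in faq.items():
        if key in state.query.lower():
            state.answer = reply
            state.done = True  # early exit: no need to escalate
            break
    return state


def escalate(state: State) -> State:
    """Fallback step: hand off to a human agent (or a larger model)."""
    state.answer = "Routing you to a customer service agent."
    state.done = True
    return state


def route(state: State) -> list[Step]:
    """Choose the remaining steps based on what earlier steps recorded."""
    if "kind=billing" in state.notes:
        return [escalate]
    return [answer_from_faq, escalate]


def run_workflow(query: str) -> State:
    state = classify(State(query=query))
    for step in route(state):
        state = step(state)
        if state.done:
            break
    return state
```

Here `run_workflow("What are your opening hours?")` stops after the FAQ step, while a refund question skips the FAQ entirely and goes straight to escalation. In a real system, each step could call a different model, or a different provider, sized to its task.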

The cost of using AI models has dropped dramatically, both from endpoint providers and in the actual cost of renting hardware. But declining costs don’t necessarily mean you’ll do the same work and pocket the savings; you’re more likely to do more with the same budget. Context engineering is about both saving money and making that “doing more” possible.

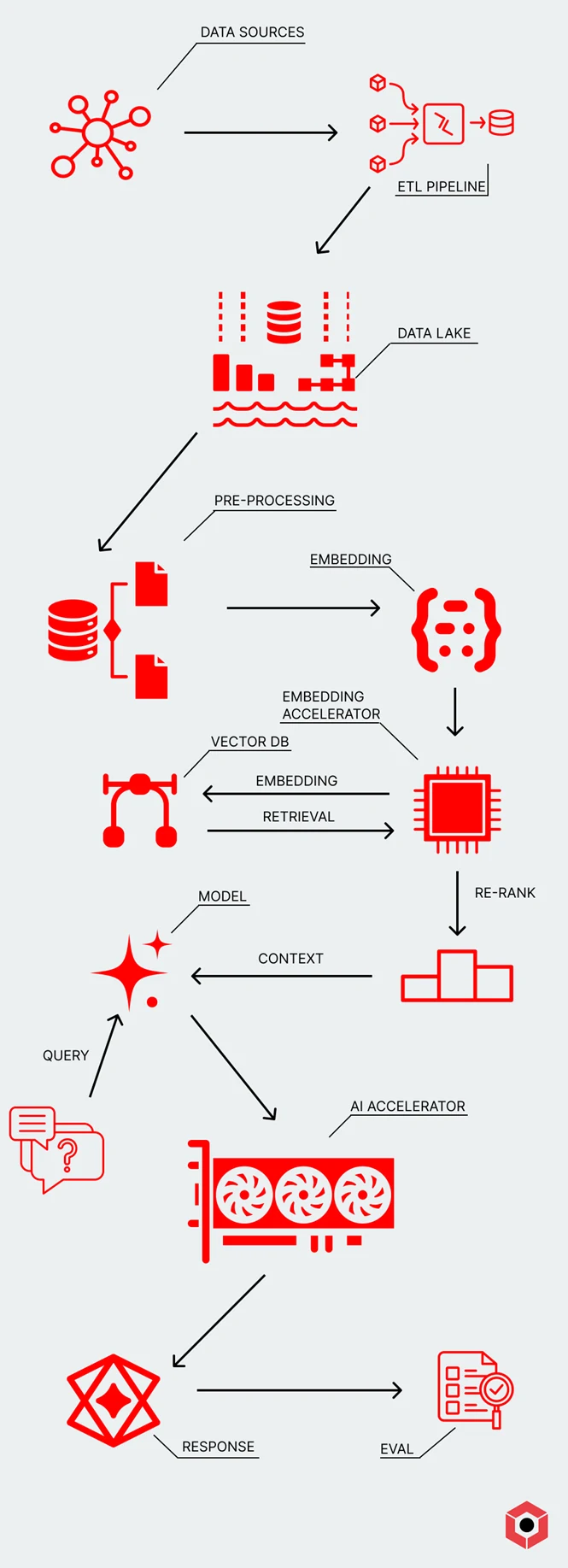

What’s in an actual context engineering pipeline?

Let’s use a classic example of a common AI-based application: a RAG-powered chatbot for customer service. In this scenario, a customer asks the chatbot a question, and you’d want the chatbot to retrieve detailed information from an FAQ before handing the customer off to a customer service agent. The pipeline behind that chatbot might include:

- Generating or making high-quality data available in the first place, such as ensuring you’re not just pointing a firehose at the pipeline.

- ELT (extract-load-transform) or ETL (extract-transform-load) connectors that ingest that data. You’ll use this data either as AI training, test, and validation sets, or for RAG-based applications (such as chatbots).

- Data lakes that will store the data, either in structured or unstructured format, prior to pre-processing and embedding.

- Pre-processing layers that take that data and conform it into a semi-structured format before placing it into an embedding pipeline. This can include chunking before the embedding process.

- Embedding layers that convert the data into a vector format that you’ll use for AI-related applications.

- A hardware accelerator for embedding models that support RAG-based applications.

- A vector database that stores embedded information for AI-based applications and makes it available in a variety of scenarios, such as high-performance RAG-based applications. Options include managed services like Pinecone or open-source databases like LanceDB and Qdrant.

- An additional embedding layer for retrieving relevant context, consisting of the base embedding model and a re-ranking model that improves retrieval accuracy.

- A hardware accelerator for the inference itself, which uses the base model and retrieved information to provide a high-quality output. This can include GPUs or custom accelerators.

- Evaluation tools to determine the success of the response, using both standard performance metrics like throughput and custom internal metrics.
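A heavily simplified version of the retrieval side of this pipeline (chunking, embedding, a vector store, retrieval, re-ranking, and prompt assembly) fits in one Python file. Treat it as a sketch built on stand-ins: the hashed bag-of-words `embed` function is a toy in place of a real embedding model, the in-memory `VectorStore` takes the place of a vector database such as Pinecone, LanceDB, or Qdrant, and `rerank` scores candidates by term overlap rather than using a trained re-ranking model. Ingestion, the data lake, and the hardware accelerators are not modeled, and all function names are hypothetical.

```python
import hashlib
import math
import re

DIM = 1024  # toy embedding width (an assumption; real models have their own sizes)


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Pre-processing: split text into windows of `size` words overlapping by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> list[float]:
    """Toy embedding: hashed bag of words, L2-normalized. md5 is used because
    Python's built-in hash() is salted per process and would not be stable."""
    vec = [0.0] * DIM
    for tok in tokens(text):
        vec[int(hashlib.md5(tok.encode()).hexdigest(), 16) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class VectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def rerank(query: str, candidates: list[str], top_n: int = 1) -> list[str]:
    """Stand-in for a re-ranking model: order by the share of query terms each candidate contains."""
    q = set(tokens(query))

    def score(candidate: str) -> float:
        return len(q & set(tokens(candidate))) / max(len(q), 1)

    return sorted(candidates, key=score, reverse=True)[:top_n]


def build_prompt(query: str, context: list[str]) -> str:
    bullets = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this FAQ context:\n{bullets}\n\nQuestion: {query}"


if __name__ == "__main__":
    faq = [
        "Refunds are issued within 5 business days of receiving the returned item.",
        "Our support line is open Monday to Friday, 9am to 5pm.",
        "Shipping is free for orders over 50 dollars.",
    ]
    store = VectorStore()
    for entry in faq:
        for piece in chunk(entry):
            store.add(piece)
    question = "How long do refunds take?"
    print(build_prompt(question, rerank(question, store.search(question, k=3))))
```

Because each stage is a separate function, any one of them can be swapped for a production component (for example, a hosted embedding model in place of `embed`) without touching the others.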

The pipeline here, though incredibly complex, offers an enormous amount of flexibility for optimization. You could fine-tune an embedding model rather than pull it off the shelf. You could also pick a vector database that suits your unique needs. You might have a custom evaluation harness to measure the subjective success of your model. And you have more freedom to decide what data you get and store if your pre-processing layer handles edge cases.
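A custom evaluation harness does not need to be elaborate to be useful. The Python sketch below times each call to an answer function (a standard performance metric) and applies a simple custom check: whether the answer mentions the phrases a good answer must contain. The `Case` and `Report` types and the keyword check are assumptions for illustration; judging subjective quality well usually calls for richer methods such as human review.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    question: str
    must_mention: list[str]  # hypothetical custom check: phrases a good answer must contain


@dataclass
class Report:
    hit_rate: float          # share of cases whose answer passed the custom check
    mean_latency_ms: float   # standard performance metric
    failures: list[str]      # questions that failed, for follow-up


def evaluate(answer_fn: Callable[[str], str], cases: list[Case]) -> Report:
    """Run every case through `answer_fn`, timing each call and applying the custom check."""
    if not cases:
        raise ValueError("need at least one test case")
    latencies: list[float] = []
    failures: list[str] = []
    for case in cases:
        start = time.perf_counter()
        answer = answer_fn(case.question)
        latencies.append((time.perf_counter() - start) * 1000.0)
        text = answer.lower()
        if not all(phrase.lower() in text for phrase in case.must_mention):
            failures.append(case.question)
    hit_rate = (len(cases) - len(failures)) / len(cases)
    return Report(hit_rate, statistics.mean(latencies), failures)
```

Any pipeline that maps a question to an answer string can be passed as `answer_fn`, so the same cases can be rerun after each change to compare hit rate and latency.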

Each of these individual steps is also improving over time (embedding and retrieval models are one example), which means you have the flexibility to adjust the pipeline one step at a time to best suit your needs.

Where the silicon sits in all this

You could think of context engineering as everything that happens before and after an accelerator fires up and processes a prompt and its associated response. That’s historically just been a bunch of the same GPUs you have sitting around, or the GPUs that are powering the models from organizations like OpenAI.

But the immense flexibility that comes with a more nuanced data pipeline also offers yet another place to optimize an AI workflow: selecting the best hardware (or provider) in the first place. If you want the path of least resistance, you might just fire everything off in a prompt to one of OpenAI’s models.

Alternatively, if you have governance or sovereignty requirements, you can pick and choose the hardware that best fits your needs. Searching across a codebase with DeepSeek running on A100s before passing a much narrower request off to Claude is a completely viable option today, thanks to the flexibility and ease of use of many tools.

This has also opened the door to nontraditional hardware, including custom accelerators like Google’s TPUs and accelerators from a whole class of startups (including us). Model routing, which includes selecting the best hardware for the job, is just one piece of the orchestration side of context engineering.
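Model routing can be sketched as a constraint check followed by a cost comparison. In this minimal Python example, each request declares its prompt size, whether its data is sensitive (the governance and sovereignty case), and a minimum quality tier, and the router picks the cheapest backend that satisfies all three. The backend names, prices, context limits, and quality tiers are made-up placeholders, not real provider figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str             # hypothetical label
    usd_per_mtok: float   # placeholder price per million prompt tokens
    max_context: int      # largest prompt the backend accepts, in tokens
    self_hosted: bool     # True if data stays on your own infrastructure
    quality: int          # coarse, hypothetical quality tier (higher is better)


@dataclass(frozen=True)
class Request:
    prompt_tokens: int
    sensitive: bool       # must the data stay on self-hosted hardware?
    min_quality: int


def route(req: Request, backends: list[Backend]) -> Backend:
    """Return the cheapest backend that meets the context, governance, and quality constraints."""
    eligible = [
        b
        for b in backends
        if b.max_context >= req.prompt_tokens
        and (b.self_hosted or not req.sensitive)
        and b.quality >= req.min_quality
    ]
    if not eligible:
        raise LookupError("no backend satisfies this request")
    return min(eligible, key=lambda b: b.usd_per_mtok)


def estimated_cost(req: Request, backend: Backend) -> float:
    """Prompt-side cost in dollars under the placeholder pricing."""
    return req.prompt_tokens / 1_000_000 * backend.usd_per_mtok


# Placeholder backends for illustration only.
BACKENDS = [
    Backend("open-weight-model-on-own-gpus", 0.50, 128_000, True, 2),
    Backend("hosted-frontier-model", 5.00, 200_000, False, 3),
    Backend("custom-accelerator-endpoint", 0.30, 32_000, False, 2),
]
```

A two-stage flow like the codebase example would call `route` once per stage: a large, sensitive search request lands on the self-hosted backend, and a much smaller follow-up that demands the top quality tier goes to the hosted model.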

Why there’s a shift to a new mental model around context engineering

The speed of model production is faster than it’s ever been. DeepSeek’s 671B-parameter R1 model came out in January this year. Around six months later, it has already been challenged by another open-weight model, Kimi K2. In between the two, Alibaba released its Qwen3 family of models in April, alongside Microsoft’s new Phi models.

And as each model improves, why wouldn’t you just use the latest one—especially if it already outperforms a model that you initially fine-tuned?

While models might change every six months, the data pipelines still effectively govern the success of an AI-powered product. Products in each step of that pipeline are slowly adopting new techniques and offering more advanced features to further optimize a data pipeline.

As more capable models come out, all those tasks that were out of reach are increasingly possible. Agentic networks are not just an option now, but an ideal way forward to both create the best possible product and optimize the cost burden to organizations (and users).

Advancements and breakthroughs in AI research are one obvious way that AI workflows will enable new products and ideas. But making some of the most complex tasks possible and cost-efficient, whether for smaller organizations or for larger ones scaling up massively, will breed new ideas as well.