The future of AI workflows is almost certainly going to be multi-modal agents. But rather than incredibly complex, compute-hungry multimodal models, there’s already a much easier pathway to get there.

When we talk about agents, we might think of some kind of autonomous super-model that can take over and complete any arbitrary task. The reality is that agentic networks are already here, but in a much more primitive form: they move through a series of semi-complex but distinct tasks, each dependent on the last. And these networks already deploy different kinds of modalities.

That doesn’t make these kinds of early agents less valuable or a kind of toy. In fact, agentic networks that link together these kinds of models with multiple modalities drive actual value by adding a level of flexibility, efficiency, and control to AI workloads. And you can build one with models right off the shelf with a much smaller footprint.

Some of the earliest models for modern generative AI covered different modalities. Whisper focused on voice, while CLIP focused on image recognition. OpenAI had an early embeddings system with its ada model series. And we’ve entered a realm where there’s a variety of these kinds of single-modality models in the open source community.

- Image-to-text models like CLIP and Llama 3.2 Vision.

- Voice-to-text models like Whisper and Canary-Qwen-2.5B.

- Text/image-to-video models like Wan2.1 or Mochi.

- Text/image-to-image models like Stable Diffusion and Flux.

- Text-to-speech models like Dia and Chatterbox.

- Text-to-text like, well, pick one of the hundreds.

Most importantly, open source models give developers optionality and flexibility for their workflows. Rather than relying on a single point of failure—like an API call to OpenAI—developers can decide exactly how their applications work while improving efficiency and addressing more particular needs, like stricter sovereignty requirements.

New open source models also come out on a relatively quick cadence across all modalities. Many large organizations and startups are vying to put out the best possible version of these open source models. And that kind of competition has led to incredible innovation and a massive push for performance.

Current-generation agentic workflows might not be the most exciting thing to talk about at the dinner table. But they generate an enormous amount of value for developers willing to put the time into building them.

Early agentic workflows excel when using task-specific open source models

These agentic workflows already make sense in a lot of current applications, even if the use cases are pretty narrow. You can break an otherwise relatively complex list of tasks into small steps and give each one a task-specific model, rather than handing each step over to OpenAI and assuming it’ll give you the performance and uptime you need.

For example, a workflow might take a voice input, like a phone call, and generate the final response on the other end in voice form. There will always be situations where customers interact over a phone call and expect some kind of voice response on the other end.

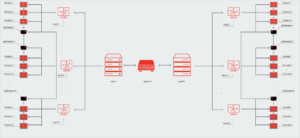

So you end up with a chain like this:

Voice-to-Text (Whisper) -> Your AI Workflow -> Text-to-Voice (Chatterbox)
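
That chain can be sketched as three swappable stages. This is a minimal illustration rather than a real integration: `transcribe`, `run_workflow`, `synthesize`, and `handle_call` are hypothetical names, and the stub bodies only fake what Whisper, a text model, and Chatterbox would do.

```python
from typing import Callable

# Each stage is a plain callable, so any one of them can be replaced on its own.
Transcriber = Callable[[bytes], str]
Workflow = Callable[[str], str]
Synthesizer = Callable[[str], bytes]


def transcribe(audio: bytes) -> str:
    """Hypothetical stand-in for a voice-to-text model such as Whisper."""
    return audio.decode("utf-8")  # pretend the audio bytes are the transcript


def run_workflow(text: str) -> str:
    """Hypothetical stand-in for the text-to-text step in the middle."""
    return f"You said: {text}"


def synthesize(text: str) -> bytes:
    """Hypothetical stand-in for a text-to-voice model such as Chatterbox."""
    return text.encode("utf-8")  # pretend the encoded text is audio


def handle_call(
    audio: bytes,
    stt: Transcriber = transcribe,
    workflow: Workflow = run_workflow,
    tts: Synthesizer = synthesize,
) -> bytes:
    """Run voice in -> text -> text -> voice out, one stage at a time."""
    return tts(workflow(stt(audio)))


reply = handle_call(b"what are your hours?")
print(reply)
```

Because each stage is passed in as an argument, replacing one of them (for example `handle_call(audio, workflow=my_other_model)`) leaves the other two untouched.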

In this case, latency and accuracy (whatever your definition is) govern whether it’s a more lifelike experience. The goal is to make an experience comparable to talking to someone on the other end of the call. And there isn’t necessarily an option to tell a multimodal model developer, hey, can you make this thing faster?

You can, right now, assign those tasks to different, even smaller models. Models like Whisper’s largest version have a memory footprint in the sub-2GB range and operate well in parallel. Some text-to-voice models like Chatterbox are under 100MB. If you have 20GB to work with, that leaves roughly 18GB of memory to spare to do… whatever you want with it!
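
The arithmetic behind that estimate is simple enough to write down. The sketch below uses only the rough figures quoted in the paragraph above (a 20GB budget, about 2GB for Whisper, about 100MB for Chatterbox); the helper `remaining_memory` is a hypothetical name, and real footprints will vary with precision, batch size, and runtime overhead.

```python
from typing import Mapping

# Rough, illustrative figures taken from the text, in gigabytes.
BUDGET_GB = 20.0
FOOTPRINTS_GB = {
    "whisper": 2.0,      # upper bound of the "sub-2GB" figure
    "chatterbox": 0.1,   # the "under 100MB" figure
}


def remaining_memory(budget_gb: float, footprints: Mapping[str, float]) -> float:
    """Return the memory left after loading every model in `footprints`."""
    used = sum(footprints.values())
    if used > budget_gb:
        raise ValueError(f"models need {used:.1f} GB but only {budget_gb:.1f} GB is available")
    return budget_gb - used


left = remaining_memory(BUDGET_GB, FOOTPRINTS_GB)
print(f"{left:.1f} GB left for the text model and everything else")
```

With those assumed numbers, the two edge models together take about a tenth of the budget, which is where the "roughly 18GB" of headroom comes from.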

You can also customize each of those smaller models with more use case-specific data, like an archive of phone calls. And you can assign those smaller models to a different class of accelerators that specialize in high-performance deployments of both ends of the chain.

Developers could use a single multimodal model to handle the whole series of tasks, or they could focus on the two more custom parts that have to be absolutely perfect from any perspective. Everything in the middle isn’t really that complicated, so you can just drop in a fast, fine-tuned open source model like OpenAI’s newest one, or a smaller model like Qwen or Llama. (And you could use custom inference accelerators specialized in text-to-text model deployment.)

Some newer open source models include capabilities like voice and image inputs. These models do work, though they come at a certain cost. They’re larger in size, and due to their multimodal nature, you lose a degree of specialization in each area.

Improvements are also predicated on updates to the entire model, and not just certain components of it. Llama 3.2, for example, has a vision-based model. But while the Llama 4-series models are multi-modal, they are also huge and designed to be more one-size-fits-all.

Meanwhile, OpenAI has continued to release updates to Whisper, further improving its transcription capabilities. Since the launch of Whisper, there have been two new releases. These models are relatively lightweight and, perhaps more importantly, can be fine-tuned on specific data to improve accuracy.

Developers can also further customize each of these models with fine-tuning. But rather than fine-tuning entire large multimodal models, they can fine-tune just the thing they want, like CLIP or Whisper, while ignoring the rest of the multimodal agentic flow.
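
One way to picture this: if each stage sits behind its own slot, a fine-tuned checkpoint can replace one stage without touching the others. The sketch below is only an illustration. The `Pipeline` record and the checkpoint identifiers (`whisper-base`, `whisper-finetuned-calls`, and so on) are hypothetical placeholders, and no real model is loaded.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pipeline:
    """Hypothetical record of which checkpoint serves each stage."""
    voice_to_text: str
    text_to_text: str
    text_to_voice: str


baseline = Pipeline(
    voice_to_text="whisper-base",        # placeholder identifiers, not real paths
    text_to_text="small-text-model",
    text_to_voice="chatterbox-base",
)

# Swap in a checkpoint fine-tuned on, say, an archive of phone calls.
tuned = replace(baseline, voice_to_text="whisper-finetuned-calls")

# Confirm that only the stage we fine-tuned actually changed.
changed = [
    name
    for name in ("voice_to_text", "text_to_text", "text_to_voice")
    if getattr(baseline, name) != getattr(tuned, name)
]
print(changed)
```

The same pattern applies when a new upstream release of a single-modality model lands: only that one slot needs to be re-evaluated and redeployed.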

Why the future of agents will be a vast array of models of differing modalities

Future AI workloads will need a variety of inputs. That can include voice interfaces like phone calls, image-based interfaces like photo editing, or text-based interfaces for chatbots. But they’ll also need a variety of outputs: voice clips, images, videos, and regular text. Both sides of that workflow have more task-specific models that excel without the need to muck around with a general purpose multimodal model.

Developers can build those workloads today. The tools are already in place to create an efficient network with significantly more control over what is the most important part of an application. Again, it’s not a kind of technology that would capture the imagination of someone at a bar—but it’s here today, and it works.

To recap here:

- Large, multi-modal models have longer release timelines because they have to optimize for a larger array of one-shot use cases.

- They carry a larger memory footprint, making it more difficult to run parallel tasks with efficient performance.

- Smaller, modality-specific models provide a flexible approach that allows developers to mix and match for their specific needs.

- Modality-specific models are much more approachable for fine-tuning, further improving their performance.

- Modality-specific models allow developers to focus on areas where they need to get the best performance. Some applications may have extreme transcription speed requirements, while others need to run a large number of image recognition tasks in parallel.

- Modality-specific models improve on different timelines than general purpose multi-modal models.

And the number of modalities is likely to increase as well! Some target use cases involve taking over a browser experience to accomplish some arbitrary task, like booking a flight. That experience, though, is a series of scrolls and clicks: individual tasks that only require understanding the next thing to click. You could see how a more sophisticated network, which could include optimization in function calling to “click around,” could accomplish a task like that.

To be sure, some multimodal models will have a place in future AI workflows. They dramatically lower the barrier to building out applications that require separate modalities. You don’t have to worry about managing both CLIP and Llama at the same time. You can instead just throw the Llama 3.2 vision model into it.

That approachability is very important for the broader ecosystem as well. It allows developers to get a “taste” of what different modalities can accomplish. General purpose APIs have done a great job of this as well, like OpenAI’s more recent GPT-series releases. Getting a feel for these workflows sparks ideas and exposes new use cases.

The challenge comes when companies are ready to graduate those kinds of multi-modal workflows into large-scale use cases. Costs and latency quickly rise when relying on more general-purpose models. But developers often find that they can create similar workflows that are substantially more efficient at a small complexity penalty. And that complexity penalty is starting to decline.

Custom inference accelerators can follow the important use cases as they emerge, instead of needing to be a one-size-fits-all solution. They can address pain points around cost, latency and GPU availability.

And, if anything, customers have the opportunity to mix and match accelerators to their specific performance requirements. You might assign a certain modality to one that specializes in speed or high-volume efficiency while still working with Nvidia hardware for colossal models that operate on a different set of requirements.

Splitting an agentic task across modalities offers another incredible way to lower costs and be much more efficient. Managing a network of task-oriented agents has also become significantly easier thanks to improvements in deployment and orchestration.

We’ll get to those mythical do-it-all agents soon enough. But for now, we have more than enough tools to build a significantly better—and much more satisfying—AI-based experience.