Large-scale AI workloads are deployed by stringing together many cards and many nodes. Any increase in model size, context length, or number of users increases the number of cards needed. Gone are the days of deploying multiple inference models on a single card. The only way to deploy inference services at scale in datacenters is to build and scale multi-node inference clusters.

At d-Matrix, we have been laser-focused on AI inference acceleration since the beginning. We built Corsair to break down the memory wall. By addressing the memory bandwidth bottleneck with innovative memory-compute integration, we deliver blazing-fast AI inference. With Corsair, every microsecond counts.

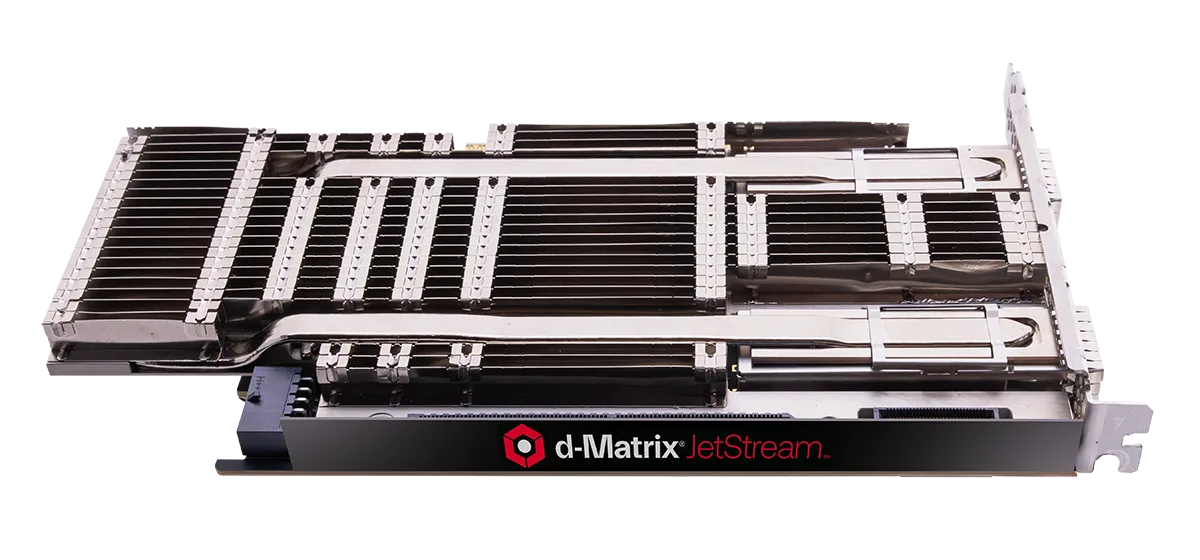

For multi-node scaling, we quickly found that standard NIC solutions weren’t going to cut it for our aggressive inference acceleration goals. So we set about building a new solution ourselves to address this I/O bottleneck. We designed JetStream, a custom I/O acceleration card, to deliver our biggest value proposition to our customers: batched, high-throughput processing with extremely low latency.

How we separated the data and control plane in inference

Corsair performs asynchronous data transfers through device-initiated communication to optimize latency. We avoid the host-device communication bottleneck by separating the data plane from the host and from the host-device control plane. We needed this separation to hold both within a server and across multiple servers, so that Corsair cards can communicate with one another in either case.
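To make the idea concrete, here is a toy Python model of device-initiated transfers. It is only an illustrative sketch, not d-Matrix software: the `Device` class, the `host_pipeline` function, and the "add one" compute step are all hypothetical stand-ins. The host wires up the route once (control plane) and then steps aside, while each device pushes its results directly to the next device (data plane).

```python
import asyncio


class Device:
    """Toy accelerator (hypothetical) that forwards results to a peer on its own."""

    def __init__(self, name):
        self.name = name
        self.inbox = asyncio.Queue()
        self.next_hop = None  # set once by the host (control plane)
        self.log = []         # results produced on this device

    async def run(self):
        # Data plane: the device itself initiates every transfer.
        while True:
            item = await self.inbox.get()
            if item is None:  # shutdown marker, passed down the chain
                if self.next_hop is not None:
                    await self.next_hop.inbox.put(None)
                return
            result = item + 1  # stand-in for on-device compute
            self.log.append(result)
            if self.next_hop is not None:
                await self.next_hop.inbox.put(result)


async def host_pipeline(inputs, num_devices=3):
    # Control plane: the host configures the route once.
    devices = [Device(f"dev{i}") for i in range(num_devices)]
    for src, dst in zip(devices, devices[1:]):
        src.next_hop = dst

    tasks = [asyncio.create_task(d.run()) for d in devices]

    # The host only feeds the first device; it never relays intermediate data.
    for x in inputs:
        await devices[0].inbox.put(x)
    await devices[0].inbox.put(None)

    await asyncio.gather(*tasks)
    return devices[-1].log


def run_demo():
    return asyncio.run(host_pipeline([0, 10, 20]))
```

Calling `run_demo()` sends three inputs through a chain of three toy devices. The point of the sketch is the shape of the flow: once the route is configured, no intermediate result passes back through the host.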

We considered using traditional RDMA NICs, but they wouldn’t meet our latency requirements, much less scale up to take full advantage of our in-memory compute engines. We needed to preserve decoupled execution and avoid any additional latency from the software overhead of traditional Ethernet NICs. In short, we didn’t need the full Ethernet protocol, just a portion of it.

JetStream, our Transparent NIC and streaming solution running over Ethernet, enables us to do exactly that: it creates accelerator-to-accelerator scale-up communication while using standard Ethernet-based scale-out infrastructure. It lets us take full advantage of our in-memory compute while seamlessly scaling out to hundreds of nodes. With JetStream, we unify scale-up and scale-out on an Ethernet fabric, using standard off-the-shelf Ethernet switches and optical and copper interconnects, and leveraging the same tools and telemetry.

Building for the future of AI inference

We are not alone in realizing that the industry needs better approaches for AI accelerators to communicate more effectively. Consortiums such as UALink and UEC have emerged to address these very challenges. As members of both, we are collaborating with the AI infrastructure companies developing these standards. For our future products, we are developing an I/O chiplet aligned to the SUE or UALink standards, which will be integrated with Raptor, our next-generation AI accelerator. In the meantime, we needed a solution for Corsair now, so we built JetStream: aligned in spirit with these standards, and a precursor to them.

We needed to become a multi-product company to achieve our goal of accelerating AI inference comprehensively. We had extreme latency requirements, high throughput goals, and the challenge of scaling it all out. These problems are interconnected, yet distinct in many ways. We emphasize co-designing software and hardware to deliver the best inference solutions, and that has forced us to innovate across compute, memory, and networking to ensure we deliver on our promise to customers.