Today, some of the most prevalent AI-powered applications are highly interactive ones: coding companions, customer service chatbots, and others.

While they all have different measures of success, they’re increasingly aligned on one thing: the user experience is the success metric, not some benchmark. Users, it turns out, care far more about how long they stand in line than about whether your throughput is a little higher than a competitor’s.

When it comes to interactive apps, that user experience is almost directly governed by latency. And that wait in line has a knob you can turn directly: batch size.

Lowering the batch size means a snappier, lower-latency user experience, while raising it increases overall throughput (and improves the economics). The choice of batch size is increasingly becoming a critical architectural and operational decision when building AI-powered applications.

It’s also increasingly clear that homogeneous AI inference pipelines built entirely on GPUs, which are incredibly sensitive to batch size, aren’t necessarily the best path forward. Instead, more heterogeneous pipelines that integrate custom accelerators offer a way to optimize that user experience and potentially enable new ones.

We’re now reaching a point where a much more diverse set of AI applications is landing in the hands of consumers. Coding companions were the start. Now chat companions, like those in customer service, are becoming a new battleground for user experience.

These interactive use cases, and escalating performance demands from users, are putting the spotlight on batch sizing and opening the door to new ways to think about AI inference pipelines.

The tension with batch size

Batch size on GPUs is one of the cleanest examples of the trade-offs involved in serving an AI application.

- Increasing the batch size allows a GPU to handle more “jobs” at once, but each individual job is processed more slowly. Handling more jobs means serving a larger number of users or requests on the same hardware, which leads to a better ROI.

- Reducing the batch size means a GPU handles fewer jobs at once, but each job gets more dedicated resources. Each user sees significantly better performance than they would on a GPU running large batches, but much of the GPU’s oomph sits idle.
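To make this trade-off concrete, here is a minimal sketch under a deliberately simplified, hypothetical cost model: each decode step takes a fixed amount of time plus a small extra cost for every request in the batch, and each request in the batch receives one token per step. The `StepCostModel` class and its numbers are illustrative assumptions, not measurements of any real GPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepCostModel:
    """Hypothetical cost model for one decode step on a GPU.

    fixed_s:       time per step that does not depend on batch size
                   (e.g. streaming the model weights from memory).
    per_request_s: extra time each additional request in the batch adds.
    """
    fixed_s: float
    per_request_s: float

    def step_time(self, batch_size: int) -> float:
        # One step produces one token for every request in the batch.
        return self.fixed_s + self.per_request_s * batch_size

    def per_user_tokens_per_s(self, batch_size: int) -> float:
        # What a single user experiences: one token per step.
        return 1.0 / self.step_time(batch_size)

    def aggregate_tokens_per_s(self, batch_size: int) -> float:
        # What the operator pays for: tokens produced across the whole batch.
        return batch_size / self.step_time(batch_size)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of any real hardware.
    model = StepCostModel(fixed_s=0.020, per_request_s=0.0005)
    for b in (1, 8, 64, 256):
        print(
            f"batch={b:4d}  "
            f"per-user={model.per_user_tokens_per_s(b):6.1f} tok/s  "
            f"aggregate={model.aggregate_tokens_per_s(b):7.1f} tok/s"
        )
```

Under these assumptions, per-user speed falls steadily as the batch grows, while aggregate throughput rises toward a ceiling of `1 / per_request_s`. That is the knob in miniature: one number trades individual experience against total output.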

You could run a GPU with a batch size of one, where a single user gets a truly magnificent interactive experience, but that user occupies the entire GPU and leaves much of it idle. That means it takes longer to earn back what you spent on that GPU, and even longer to start generating a real ROI on it. If you jack the batch size up into the thousands, your ROI goes up, but you get a lot of users who walk away feeling pretty meh.

Batch size is an elegant way of expressing these trade-offs: a labeled knob you can actually turn. Finding the right setting for each app is one of the most important decisions in building it successfully. It also highlights an opportunity to rethink how batch sizing is handled at every level, all the way down to the hardware itself.

That knob also maps almost directly to an application’s latency, one of the key factors in its success. Customers generally really don’t like waiting. For an interactive app, latency now governs the entire user experience.

Moving forward with small batches and custom accelerators

The difference between the magnificent and the meh experience is what’s going to govern the success of AI-powered applications going forward.

GPUs were built with peak throughput in mind, but a user probably isn’t going to care about the difference between 330 and 360 tokens per second if they’ve been stuck in line first. They’ll just bail, and they’ll take their revenue with them.
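A back-of-the-envelope sketch shows why. Assuming, hypothetically, a 300-token reply and ignoring pre-fill and network time, the gap between 330 and 360 tokens per second comes to well under a tenth of a second, while every second spent waiting in a queue adds to the total one-for-one. The `time_to_last_token` function, the reply length, and the 5-second wait are illustrative assumptions.

```python
def time_to_last_token(queue_wait_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Seconds from submitting a request to receiving its final token.

    Simplified, hypothetical model: pre-fill time and network overhead are ignored.
    """
    return queue_wait_s + output_tokens / tokens_per_s


if __name__ == "__main__":
    reply_tokens = 300  # hypothetical reply length
    for wait_s in (0.0, 5.0):  # no queue vs. a hypothetical 5-second wait in line
        slow = time_to_last_token(wait_s, reply_tokens, 330.0)
        fast = time_to_last_token(wait_s, reply_tokens, 360.0)
        print(
            f"wait={wait_s:.1f}s  330 tok/s -> {slow:.2f}s  "
            f"360 tok/s -> {fast:.2f}s  gap={slow - fast:.3f}s"
        )
```

The gap between the two decode speeds stays the same with or without the queue, but once the user has waited in line it becomes a rounding error on the total.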

Batch size offers one of the cleanest ways to weigh the trade-off between user experience and the actual cost of the GPUs you have in production. That tension also exposes some key weaknesses of GPUs, and the opportunities open to custom inference-optimized accelerators.

Newer multimodal applications that involve agentic flows are also making the trade-off more complicated. Snappier applications, such as those built around voice agents, need extremely low latency but still have to be able to scale up.

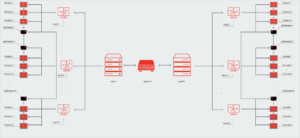

Heterogeneous pipelines offer an opportunity to bring in different kinds of hardware, each performing best in a different scenario. For example, you might pick hardware specifically for the pre-fill stage of a transcription model. That’s a very specific choice, but thanks to emerging frameworks and new types of hardware, it’s a real option for developers.
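One way to picture such a pipeline is a routing table that sends each stage of a request to a different hardware pool, each pool running at its own batch size. This is a minimal sketch: the pool names, batch sizes, and the `plan` helper are hypothetical and do not correspond to any particular serving framework’s API.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    PREFILL = "prefill"  # process the whole prompt (or audio) in one pass
    DECODE = "decode"    # generate output tokens one at a time


@dataclass(frozen=True)
class HardwarePool:
    name: str            # hypothetical pool name
    max_batch_size: int  # hypothetical batch size this pool runs at


# Hypothetical routing table: each (model, stage) pair goes to the pool suited to it.
ROUTES: dict[tuple[str, Stage], HardwarePool] = {
    ("transcription", Stage.PREFILL): HardwarePool("prefill-accelerator", 4),
    ("transcription", Stage.DECODE): HardwarePool("gpu-pool", 128),
    ("chat", Stage.PREFILL): HardwarePool("gpu-pool", 128),
    ("chat", Stage.DECODE): HardwarePool("low-batch-decode-accelerator", 8),
}

# Anything without an explicit route falls back to the general-purpose GPU pool.
DEFAULT_POOL = HardwarePool("gpu-pool", 128)


def plan(model: str) -> list[tuple[str, str, int]]:
    """Return (stage, pool name, batch size) for each stage of a request, in order."""
    steps = []
    for stage in Stage:  # Enum iteration follows definition order: PREFILL, then DECODE
        pool = ROUTES.get((model, stage), DEFAULT_POOL)
        steps.append((stage.value, pool.name, pool.max_batch_size))
    return steps


if __name__ == "__main__":
    for model in ("transcription", "chat", "summarizer"):
        print(model, plan(model))
```

Keeping the routing decision in a table rather than in code means a new accelerator can be slotted in for one stage of one model without touching the rest of the pipeline.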

Those same pipelines might also have slots for custom accelerators that respond to batch size differently than GPUs do. GPUs can excel at pre-fill or training jobs, but small-batch, high-utilization hardware is what’s really going to get a thumbs-up from a CFO.