The first cloud computing revolution triggered a tsunami-grade buildout for data centers—both for emerging hyperscalers like Amazon Web Services, and companies re-orienting their systems around cloud-based operations. At the time, we were talking about a bunch of CPUs that you were connecting to the internet.

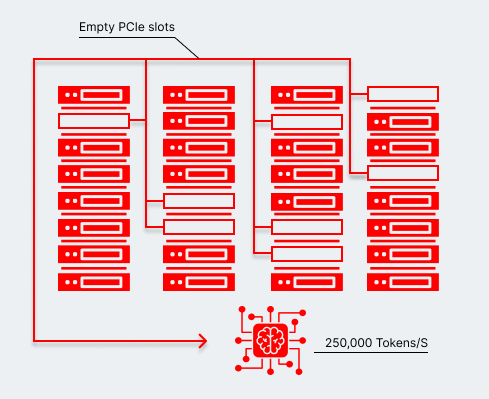

Now we’re dealing with a whole new surge in data center demand to support the explosive growth of AI tools, but with a twist: we already have tons of facilities worldwide with an abundance of PCIe slots.

Supporting next-generation AI doesn’t necessarily mean constructing massive gigawatt-scale GPU-focused data centers. You could also walk up to a rack in an existing facility, see if it has a few PCIe slots free, and drop a custom accelerator in to deliver responsive, AI-enabled functionality to dozens of users.

The catch is that these legacy servers weren’t built to handle the power draw of today’s flagship AI GPUs. A report from the Uptime Institute noted that 81% of surveyed companies said racks in the 1-9 kW range were most common. That envelope can’t really sustain ultra-high-performance GPUs like GB200s, which can draw up to 1.2 kW per card.

Accommodating that tier of compute usually requires one of two things: ripping and replacing entire racks with GPU-optimized ones; or expanding the floor plan and electrical footprint to build new ones. Neither option is ideal—or cheap.

Custom AI chips have a key advantage: they can slide directly into existing servers using standard interconnects. State-of-the-art GPUs often require fundamentally different infrastructure, but low-power, air-cooled, PCIe-based accelerators can run in any server with an open slot and enough spare power.

Custom accelerators really only need two things: space and available power.

Every unused PCIe slot and leftover watt is untapped AI throughput

Let’s say you’ve got 4 kW of headroom in a rack. You could:

- Leave it unused, burning money on idle real estate.

- Assign it to non-critical tasks and miss out on AI value. Or…

- Populate those slots with low-power ASICs and start generating tens of thousands of tokens per second—no architectural changes required.

4 kW might not sound like much, but it’s enough to deploy several memory-optimized accelerators to run speech-to-text, text-to-speech, or even multimodal workloads.
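
To make that budget concrete, here is a rough back-of-the-envelope sketch of how many cards a given amount of headroom could support. The per-card wattage, the number of open slots, and the reserve margin are illustrative assumptions, not figures from any vendor or from the report cited above.

```python
from dataclasses import dataclass


@dataclass
class RackBudget:
    headroom_watts: int          # spare rack power, e.g. the 4 kW above
    free_slots: int              # open PCIe slots in the rack (assumed)
    card_watts: int              # per-card draw (assumed, varies by device)
    reserve_percent: int = 20    # headroom held back as a margin (assumed)


def cards_that_fit(budget: RackBudget) -> int:
    """Cards deployable when limited by both power and slot count."""
    usable_watts = budget.headroom_watts * (100 - budget.reserve_percent) // 100
    by_power = usable_watts // budget.card_watts
    return min(by_power, budget.free_slots)


# Hypothetical inputs: 4 kW headroom, 24 open slots, 150 W per card.
example = RackBudget(headroom_watts=4000, free_slots=24, card_watts=150)
print(cards_that_fit(example))  # 21 with these assumed numbers
```

The point of taking the minimum is that either constraint can bind first: a rack with plenty of watts but few open slots is slot-limited, and the reverse is power-limited.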

All those idle slots quickly add up to a functional infrastructure for high-performance AI-based applications. One spare node might handle a lightweight model on its own. Multiple spare nodes across multiple racks translate to even larger models for more complex applications and tasks.

In this case, inefficiency actually offers an opportunity. No facility is perfectly optimized, especially for the strain of modern AI inference. But the AI revolution offers a way to reclaim the slack left behind by the relentless infrastructure buildout of the first wave of the cloud revolution.

Adding accelerators doesn’t necessarily require upending your entire infrastructure. You can offload parts of inference tasks to these devices while keeping your existing GPUs. For instance, you might split workloads by phase: a GPU handles the compute-heavy prefill step, where the whole prompt is processed at once, and a memory-optimized chip handles decoding, where tokens are generated one at a time.
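
As a minimal sketch of what that split looks like in control flow, the toy code below hands a request from a prefill worker to a decode worker. Every name here (`Request`, `PrefillWorker`, `DecodeWorker`, `serve`) is hypothetical, and no real model runs: the "cache" is just a counter and the generated tokens are placeholders. A production system would also have to move the actual key-value cache between devices.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Request:
    prompt_tokens: List[int]
    max_new_tokens: int
    generated: List[int] = field(default_factory=list)


class PrefillWorker:
    """Stand-in for a GPU: processes the whole prompt in one pass."""

    def __init__(self, name: str):
        self.name = name

    def prefill(self, request: Request) -> dict:
        # A real worker would build the key-value cache here.
        return {"cached_tokens": len(request.prompt_tokens),
                "prefilled_on": self.name}


class DecodeWorker:
    """Stand-in for a memory-optimized accelerator: one token per step."""

    def __init__(self, name: str):
        self.name = name

    def decode(self, request: Request, state: dict) -> dict:
        for _ in range(request.max_new_tokens):
            request.generated.append(0)   # placeholder token id
            state["cached_tokens"] += 1   # cache grows with each new token
        state["decoded_on"] = self.name
        return state


def serve(request: Request, gpu: PrefillWorker, accel: DecodeWorker) -> dict:
    """Run prefill on one device, then hand the state over for decoding."""
    state = gpu.prefill(request)
    return accel.decode(request, state)


req = Request(prompt_tokens=[11, 12, 13], max_new_tokens=4)
final_state = serve(req, PrefillWorker("gpu-0"), DecodeWorker("asic-0"))
print(final_state["prefilled_on"], final_state["decoded_on"], len(req.generated))
```

The handoff in `serve` is the piece that matters: the existing GPU stays in the pipeline, and the added accelerator only takes over the token-by-token phase.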

Filling out those empty slots may not be flashy like a brand-new hyperscale facility, but it lets you begin serving effective AI workloads right away.

The future is disaggregated

All those unused PCIe lanes are effectively dormant inference networks. One path is building new infrastructure. But another, more immediate route is retrofitting what already exists. Think of it as recycling: plugging holes in racks already drawing power.

And in a world where responsiveness is table stakes, ignoring that unused capacity is a missed opportunity. If your competitors are tapping into every watt and slot they can, you can’t afford to fall behind.

AI models today thrive in interactive applications, and the demands on them are only growing as development accelerates. Agent frameworks that once felt like science fiction are now standard tooling for multimodal use cases, all of which demand fast reaction times.

The best part? You don’t have to tear everything down. You don’t need more racks. You just need to recognize the latent infrastructure you already own and start putting it to work.