Recent breakthroughs in reasoning AI have shifted the focus towards scalable inference-time compute. Enterprises want to leverage these advances to deliver real-time, interactive user experiences while also serving a vast user base cost-effectively. These objectives are often in conflict, driving demand for new inference solutions that can deliver both. This blog post examines the impact on inference hardware, the inherent tradeoffs, and innovative solutions that redefine what’s possible.

Need for speed – AI demands low latency inference

Interactive Generative AI applications demand near-instant responses to provide a fluid user experience. Whether it’s a reasoning agent, conversational chatbot, coding assistant, or interactive video generation, low-latency inference is becoming a key enabler and differentiator. As models ‘think’ more and generate longer or multiple chains of thought, each response requires many more tokens. Inference time therefore increases significantly, and minimizing per-token latency becomes even more critical for engagement.
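To make the latency argument concrete: for a decoding model, end-to-end response time is roughly the time to first token plus the number of generated tokens multiplied by the time per output token. The sketch below uses made-up numbers (assumptions, not measurements). It shows how a longer chain of thought multiplies per-token latency into a long wait.

```python
def response_time_s(ttft_s: float, output_tokens: int, tpot_s: float) -> float:
    """Approximate end-to-end latency of one streamed response.

    ttft_s: time to first token (prompt processing), in seconds
    tpot_s: time per output token during decoding, in seconds
    """
    return ttft_s + output_tokens * tpot_s


# Hypothetical numbers for illustration only.
chat_answer = response_time_s(0.5, 300, 0.020)          # short direct answer
reasoning_answer = response_time_s(0.5, 5_000, 0.020)   # long chain of thought
faster_decode = response_time_s(0.5, 5_000, 0.002)      # same tokens, 10x lower per-token latency

print(f"chat: {chat_answer:.1f} s")
print(f"reasoning: {reasoning_answer:.1f} s")
print(f"reasoning with faster decode: {faster_decode:.1f} s")
```

Once outputs run to thousands of tokens, the per-token term dominates. Cutting time per output token is then the main lever on responsiveness.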

Scaling smart – batched throughput drives better ROI

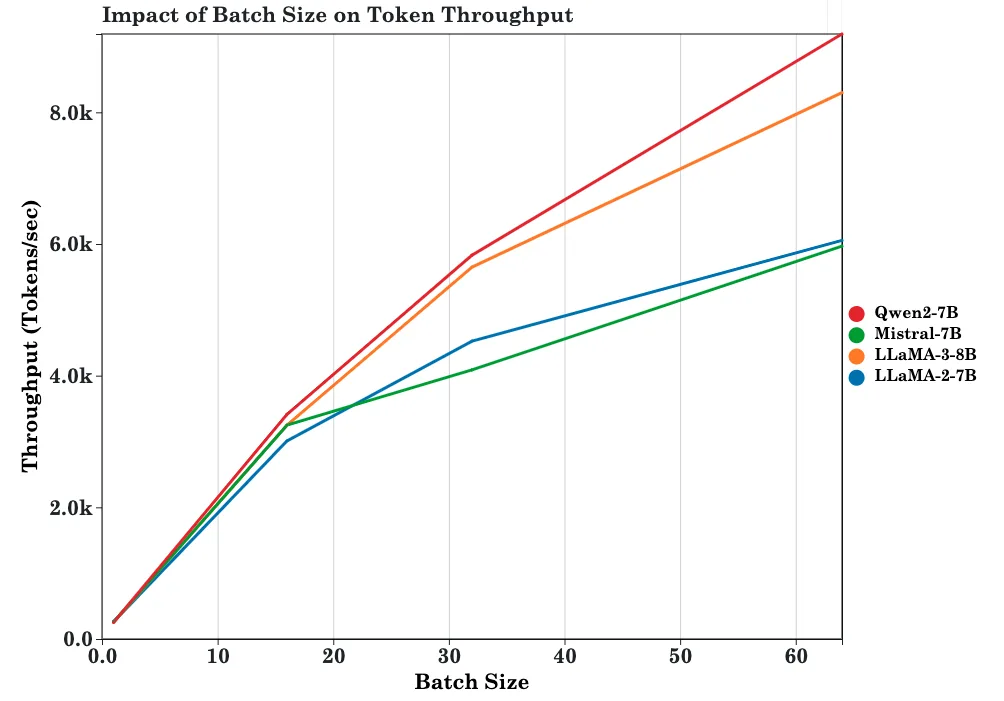

Cost-effective, large-scale AI deployments need datacenters optimized for inference. Serving costs include both capital investments in inference compute and operating expenses such as power, hosting, and maintenance. Rather than focusing solely on interactivity, the objective is to maximize inference output for a given hardware capacity and power budget, measured as total token throughput per rack and per watt. This is achieved by batching, i.e., processing multiple inference requests concurrently to improve resource utilization. Costs drop as resources are amortized over multiple users, yielding better ROI for enterprises.
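A toy model makes the throughput-per-rack and per-watt metrics concrete. Every number below (rack power, hourly cost, per-user decode speed) is a hypothetical placeholder. The model also idealizes batching: it assumes per-user speed does not degrade as the batch grows, which real hardware only approximates until it runs short of memory bandwidth or capacity.

```python
from dataclasses import dataclass


@dataclass
class RackConfig:
    """Hypothetical rack parameters; none of these values describe a real product."""
    power_w: float                 # total rack power draw, watts
    cost_per_hour: float           # amortized capex + opex, dollars per hour
    tokens_per_s_per_user: float   # per-user decode speed (interactivity)


def rack_metrics(rack: RackConfig, batch_size: int) -> dict:
    # Idealized assumption: per-user speed stays constant as the batch grows.
    throughput = rack.tokens_per_s_per_user * batch_size   # tokens/s per rack
    tokens_per_hour = throughput * 3600
    return {
        "tokens_per_s": throughput,
        "tokens_per_s_per_watt": throughput / rack.power_w,
        "usd_per_million_tokens": rack.cost_per_hour / tokens_per_hour * 1e6,
    }


rack = RackConfig(power_w=40_000, cost_per_hour=100.0, tokens_per_s_per_user=50.0)
for batch in (1, 8, 64):
    m = rack_metrics(rack, batch)
    print(f"batch={batch:3d}  {m['tokens_per_s']:7.0f} tok/s  "
          f"${m['usd_per_million_tokens']:.2f} per 1M tokens")
```

Under these assumptions, cost per token falls in proportion to batch size, because the same hourly cost and power are spread over more users.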

Tug-of-war – Dual memory bottlenecks in Generative AI

Generative AI inference is an unusual workload because token generation is inherently memory bound, and it is constrained by memory in two different ways.

A) Memory bandwidth bottleneck: each generated token requires reading all model parameters and intermediate data (the KV cache) from memory. This bottleneck intensifies in reasoning models, which are larger and use longer context windows. Achieving lower latency requires tremendous scaling of memory bandwidth.

B) Memory capacity bottleneck: increasing token throughput by batching requires greater memory capacity, because the KV cache footprint grows with batch size.
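Both bottlenecks appear in a back-of-the-envelope model of a single decode step. The sketch below makes several assumptions:

- The step is bandwidth bound, so it streams all weights once per step (shared across the batch) plus each sequence's full KV cache.
- Compute time and activations are ignored.
- The model shape, 8K context, and 3 TB/s bandwidth are illustrative and are not measurements of any product.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    # Keys and values (factor 2) are stored for every layer, KV head, and token.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


def decode_step_floor_s(weight_bytes: float, kv_bytes_total: float,
                        bandwidth_bytes_per_s: float) -> float:
    # Bandwidth-bound lower bound on one decode step: weights are read once
    # (shared by the whole batch) plus every sequence's KV cache.
    return (weight_bytes + kv_bytes_total) / bandwidth_bytes_per_s


# Illustrative configuration (assumed): a 70B-parameter dense model in FP16.
WEIGHTS = 70e9 * 2          # bytes of weights
BANDWIDTH = 3e12            # bytes/s, hypothetical accelerator
CONFIG = dict(n_layers=80, n_kv_heads=8, head_dim=128, seq_len=8192)

for batch in (1, 32):
    kv = kv_cache_bytes(batch_size=batch, **CONFIG)
    step = decode_step_floor_s(WEIGHTS, kv, BANDWIDTH)
    print(f"batch={batch:3d}  memory={(WEIGHTS + kv) / 1e9:6.1f} GB  "
          f"latency>={step * 1e3:5.1f} ms/token  throughput<={batch / step:5.0f} tok/s")
```

Growing the batch from 1 to 32 raises aggregate throughput many times over. It also lengthens each step and adds tens of gigabytes of KV cache, so the bandwidth and capacity bottlenecks pull in opposite directions.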

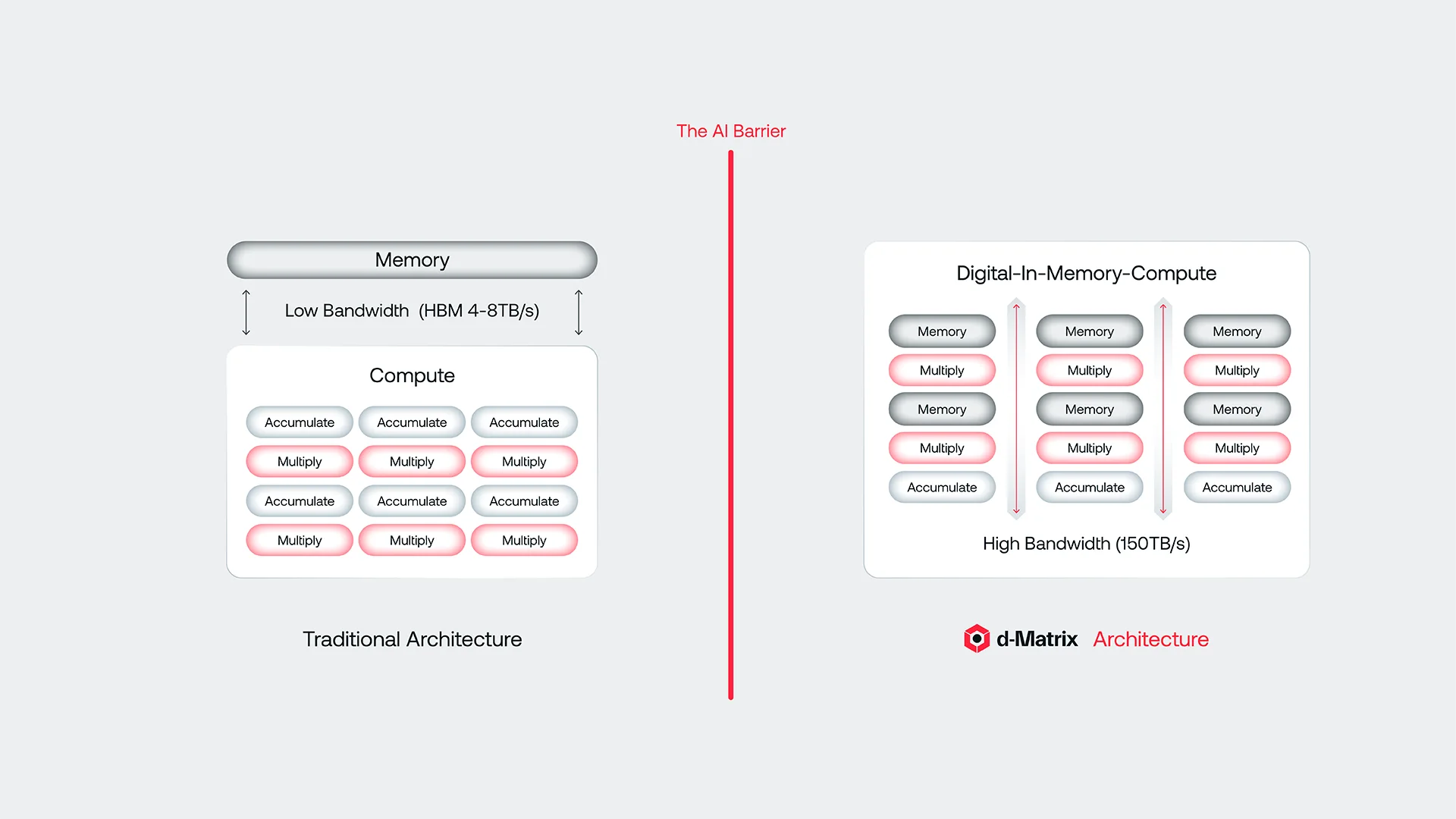

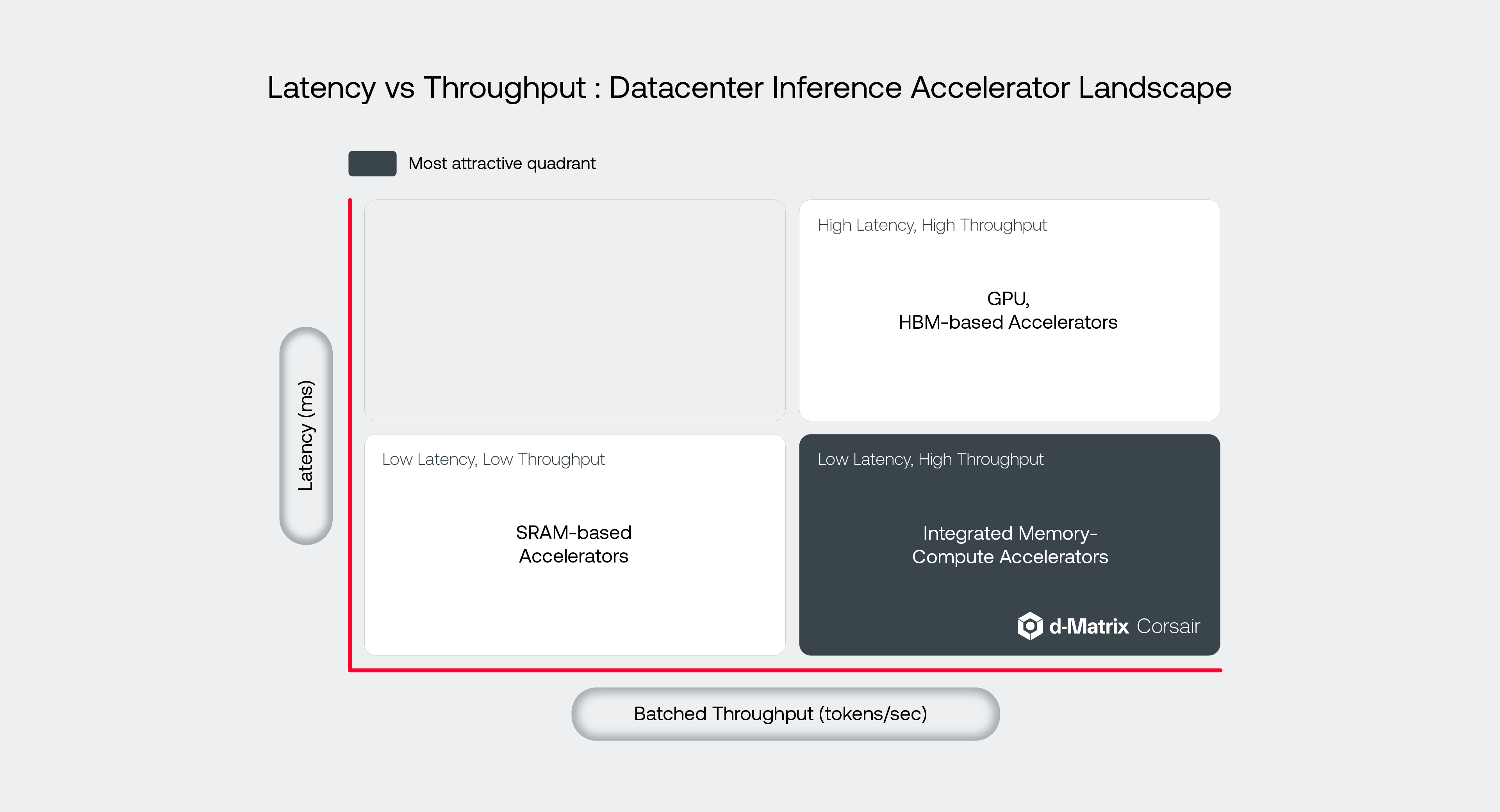

Different memory technologies have each addressed one constraint, but not both. GPUs rely on High Bandwidth Memory (HBM), which has inherent limitations in scaling bandwidth in a practical, power-efficient manner. Because the memory is physically separate from compute, bandwidth is limited by pin count and by the power needed to move data between separate dies. Hence, HBM-based accelerators struggle to deliver low-latency inference. However, HBM offers high memory capacity and can enable large batch sizes, effectively increasing token throughput and reducing per-token costs.
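The pin-count and power limits can be written as two simple products. Peak interface bandwidth is the number of data pins times the per-pin signaling rate. Data-movement power is the number of bits moved per second times the energy per bit.

The sketch uses the JEDEC HBM3 interface figures (1024-bit data interface, up to 6.4 Gb/s per pin) as an example. The six-stack count and the 4 pJ/bit energy figure are illustrative assumptions.

```python
def interface_bandwidth_bytes_per_s(n_stacks: int, pins_per_stack: int,
                                    gbps_per_pin: float) -> float:
    # Peak bandwidth = data pins x per-pin rate, converted from bits to bytes.
    return n_stacks * pins_per_stack * gbps_per_pin * 1e9 / 8


def io_power_w(bandwidth_bytes_per_s: float, pj_per_bit: float) -> float:
    # Data-movement power = bits per second x energy per bit.
    return bandwidth_bytes_per_s * 8 * pj_per_bit * 1e-12


one_stack = interface_bandwidth_bytes_per_s(1, 1024, 6.4)   # HBM3-class stack
six_stacks = interface_bandwidth_bytes_per_s(6, 1024, 6.4)  # assumed stack count
print(f"{one_stack / 1e9:.1f} GB/s per stack")
print(f"{io_power_w(six_stacks, 4.0):.0f} W of I/O power at {six_stacks / 1e12:.2f} TB/s")
```

At a fixed energy per bit, I/O power grows linearly with bandwidth. Under this model, each additional increment of off-chip bandwidth therefore requires both more pins and proportionally more power.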

Some custom AI accelerators, by contrast, use on-chip SRAM to achieve drastically higher memory bandwidth and reduce token latency. However, typical SRAM designs have low density and offer limited memory capacity per chip, which restricts batched throughput. Even going to wafer scale does not resolve this challenge, because popular generative models contain tens to hundreds of billions of parameters. Fitting such models in SRAM requires distributing them across several racks, which adds expense. That expense can become cost-prohibitive if token throughput does not scale proportionally. As a result, these solutions can end up more expensive than GPUs.
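A quick count shows how SRAM capacity per chip drives the footprint. The numbers below are hypothetical: a 70B-parameter model in FP16, 256 MB of SRAM per chip, and 64 chips per rack. They are not taken from any specific product, and the count covers weights only, before any KV cache is added for batching.

```python
def ceil_div(a: int, b: int) -> int:
    # Integer ceiling division without floating-point rounding.
    return -(-a // b)


def sram_footprint(n_params: int, bytes_per_param: int,
                   sram_bytes_per_chip: int, chips_per_rack: int) -> tuple:
    """Chips and racks needed just to hold the model weights in on-chip SRAM."""
    weight_bytes = n_params * bytes_per_param
    chips = ceil_div(weight_bytes, sram_bytes_per_chip)
    racks = ceil_div(chips, chips_per_rack)
    return chips, racks


chips, racks = sram_footprint(70_000_000_000, 2, 256_000_000, 64)
print(chips, "chips across", racks, "racks, before any KV cache for batching")
```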

The DeepSeek Moment

In this short talk, d-Matrix CTO Sudeep Bhoja discusses the release of the DeepSeek-R1 model and its impact on inference compute. He covers the evolution of reasoning models and the significance of inference-time compute in enhancing model performance.

Best of both worlds – d-Matrix’s innovative approach

Delivering “low-latency batched inference” demands a fundamental rethinking of accelerator design from first principles – one that achieves both high memory bandwidth and high memory capacity. It requires redesigning compute elements to be more efficient, freeing up area and power budget to maximize memory capacity. Ultimately, the goal is to develop a scalable and high-density architecture.

As an analogy, imagine traveling from San Francisco to New York. First consider trains: they can carry thousands of passengers (i.e., handle large batch sizes), but the journey takes days (high latency). Now consider a commercial airplane: travel time drops to hours (i.e., ultra-low latency), while the plane still carries hundreds of passengers (batched throughput) and remains commercially viable. The steam engine revolutionized transportation, but decades later an entirely new engine, the jet engine, was needed to redefine travel speed.

At d-Matrix, we’ve built the new jet engine for the inference age.

d-Matrix Corsair accomplishes this by redesigning memory and tightly integrating it with compute. This design delivers an order of magnitude more memory bandwidth than GPUs and outperforms existing custom inference solutions, resulting in ultra-low-latency token generation and superior user interactivity.

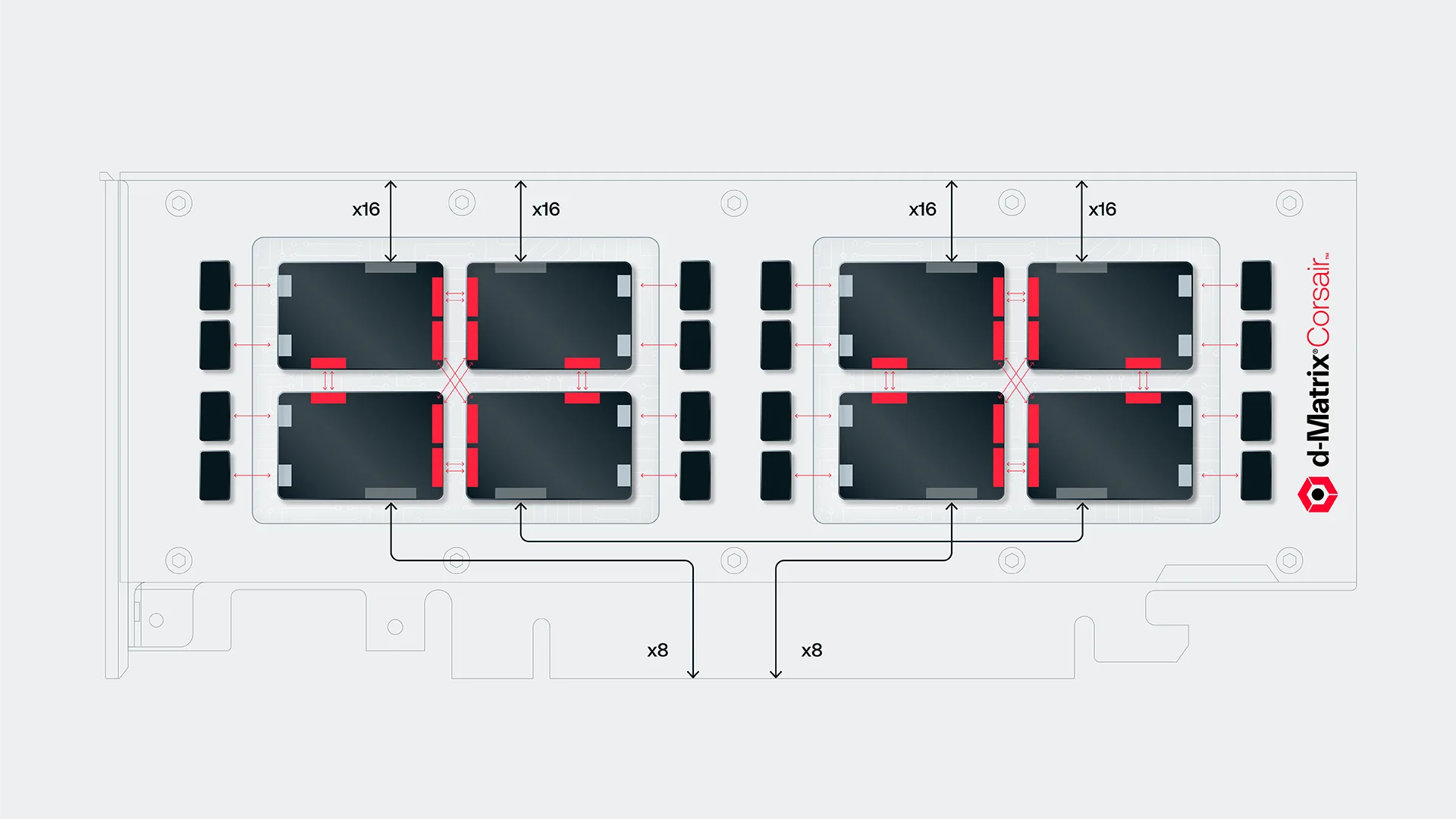

For high compute efficiency, Corsair employs a novel Digital In-Memory Compute (DIMC) architecture that uses custom matrix-multiply circuits and block floating-point numerics. Scalability comes from a chiplet-based design: a single Corsair package contains 4 chiplets interconnected via low-power die-to-die links. Each Corsair PCIe card houses two such packages (8 chiplets), providing a total of 2GB of ‘Performance Memory’ within a 600W power envelope. That capacity far exceeds comparable alternatives. To serve large generative AI models, Corsair cards are designed to scale out efficiently to a rack over standard interconnect fabrics (PCIe and Ethernet).
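Block floating point lets a matrix engine operate mostly on integers. Each block of values shares one exponent, and each element keeps only a small signed integer mantissa.

The sketch below is a generic, textbook-style illustration, not a description of Corsair's actual number formats or circuits. The block contents, the `mantissa_bits` parameter, and the symmetric clipping are illustrative assumptions.

```python
import math
from typing import List, Tuple


def bfp_quantize(block: List[float], mantissa_bits: int = 8) -> Tuple[int, List[int]]:
    """Quantize a block to one shared scale exponent plus signed integer mantissas."""
    qmax = 2 ** (mantissa_bits - 1) - 1
    # Shared exponent E: the smallest E with |x| < 2**E for every nonzero element
    # (math.frexp returns x = m * 2**e with 0.5 <= |m| < 1).
    shared_exp = max((math.frexp(x)[1] for x in block if x != 0.0), default=0)
    scale_exp = shared_exp - (mantissa_bits - 1)
    mantissas = []
    for x in block:
        q = round(math.ldexp(x, -scale_exp))       # x / 2**scale_exp, rounded
        mantissas.append(max(-qmax, min(qmax, q)))  # clip to the signed range
    return scale_exp, mantissas


def bfp_dequantize(scale_exp: int, mantissas: List[int]) -> List[float]:
    """Reconstruct approximate values: mantissa * 2**scale_exp."""
    return [math.ldexp(float(q), scale_exp) for q in mantissas]


exp, mant = bfp_quantize([0.5, -0.25, 0.125, 3.0])
print(exp, mant, bfp_dequantize(exp, mant))
print(bfp_quantize([100.0, 0.001]))
```

The second call shows the tradeoff. A large value in the block sets the shared exponent, so a much smaller neighbor can round to zero. Smaller blocks limit this effect, at the cost of storing more exponents.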

As a result, Corsair delivers significantly higher Performance Memory capacity per rack. Relative to GPUs, this translates to 10 times higher interactivity and 3 times better cost-performance. Moreover, Corsair supports low-precision numerics (MXINT4) with a smaller memory footprint, further widening its lead in interactivity and throughput.
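A rough sizing exercise shows why a smaller memory footprint matters when each card holds 2GB of Performance Memory. The sketch rests on these assumptions:

- Only model weights are counted (no KV cache or activations).
- 2GB is read as 2×10^9 bytes.
- The 70B-parameter model is hypothetical.
- By analogy with the OCP Microscaling (MX) formats, MXINT4 stores 4-bit elements in blocks of 32 that share one 8-bit scale. This layout is an assumption, not a confirmed description of Corsair's format.

```python
def ceil_div(a: int, b: int) -> int:
    # Integer ceiling division without floating-point rounding.
    return -(-a // b)


def weight_bits(n_params: int, element_bits: int,
                block_size: int = 0, scale_bits: int = 0) -> int:
    # Element payload plus, for block formats, one shared scale per block.
    bits = n_params * element_bits
    if block_size:
        bits += ceil_div(n_params, block_size) * scale_bits
    return bits


def cards_for_weights(total_bits: int, bytes_per_card: int = 2_000_000_000) -> int:
    # 2GB of Performance Memory per card, interpreted as decimal gigabytes.
    return ceil_div(total_bits, 8 * bytes_per_card)


N = 70_000_000_000  # hypothetical 70B-parameter model
fp16 = weight_bits(N, 16)
mxint4 = weight_bits(N, 4, block_size=32, scale_bits=8)  # assumed MX-style layout
print("FP16 cards:", cards_for_weights(fp16))
print("MXINT4 cards:", cards_for_weights(mxint4))
```

Under these assumptions, the 4-bit block format cuts the weight footprint to about 4.25 bits per parameter. The model then needs roughly a quarter of the cards. Fewer bytes per parameter also means fewer bytes read per generated token.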

Redefining AI inference of the future

Widespread AI adoption hinges on reconciling the need for both low latency and high batched throughput. As model sizes grow and user demands intensify, the trade-offs of traditional hardware architectures will become even more pronounced. Inference platforms like d‑Matrix Corsair demonstrate that a no-compromise solution is possible when designed from first principles and tailored for real-world generative use cases – unlocking unprecedented performance and efficiency for next-generation AI inference.