AI adoption is accelerating at an unprecedented pace, but the economics of running AI models are becoming unsustainable. And the cracks are beginning to show. As more people rush to use the latest models and the services built on top of them, AI service providers are raising prices and throttling usage to compensate for expensive inefficiencies in their underlying data center infrastructure.

But make no mistake. The challenge isn’t simply about compute. It’s about memory. And throwing more GPUs at the problem won’t solve it.

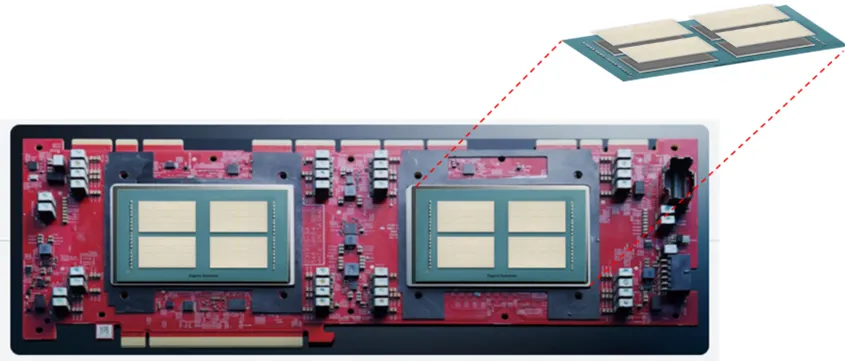

At d-Matrix, we’ve built our company by spotting systemic challenges early. Years ago, when most of the industry was still focused on training, we recognized that inference would become AI’s true cost center. And we made an early bet on accelerating transformer inference. That foresight shaped the memory-obsessed, chiplet-based architecture behind d-Matrix Corsair, the world’s first digital in-memory compute inference accelerator.

Today, we’re taking the next step: adding a state-of-the-art implementation of 3D stacked digital in-memory compute, 3DIMC™, to our roadmap. Our first 3DIMC-enabled silicon, d-Matrix Pavehawk™, has been more than two years in the making and is now operational in our labs, a milestone for the industry. We expect 3DIMC to increase memory bandwidth and capacity for AI inference workloads by orders of magnitude, so that as new models and applications emerge, service providers and enterprises can run them at scale efficiently and affordably.

When Did Memory Become a Problem?

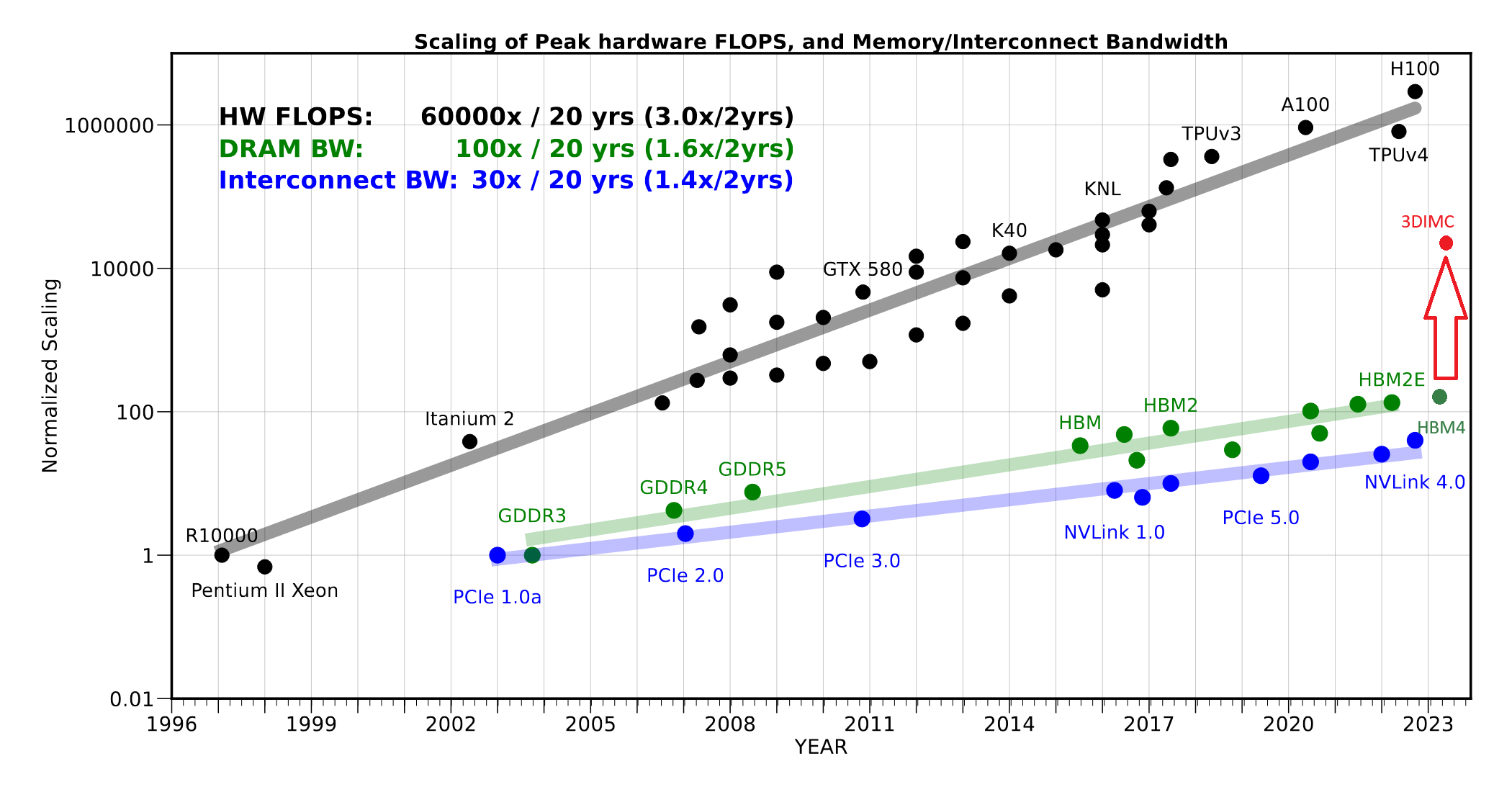

The term “memory wall” describes the widening gap between how fast processors can compute and how fast memory can supply them with data. It was coined in 1994, and the “wall” has grown significantly higher since then. Industry benchmarks show that compute performance has grown roughly 3x every two years, while memory bandwidth has grown just 1.6x over the same period. The result is a widening gap in which pricey processors sit idle, waiting for data to arrive.
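To get a feel for how quickly those two growth rates diverge, here is a minimal Python sketch that compounds them over time. It assumes both rates hold steady indefinitely, which is a simplification. The function and constant names are ours, chosen for illustration.

```python
# Compound the two growth rates quoted above (3x and 1.6x per two years).
# Assumption: both rates stay constant over the whole horizon.

COMPUTE_GROWTH_PER_PERIOD = 3.0     # compute performance, per two years
BANDWIDTH_GROWTH_PER_PERIOD = 1.6   # memory bandwidth, per two years
PERIOD_YEARS = 2.0


def compound_growth(rate_per_period: float, years: float) -> float:
    """Total growth factor after `years` at a fixed per-period rate."""
    return rate_per_period ** (years / PERIOD_YEARS)


def compute_to_bandwidth_gap(years: float) -> float:
    """How much further compute has grown than memory bandwidth."""
    compute = compound_growth(COMPUTE_GROWTH_PER_PERIOD, years)
    bandwidth = compound_growth(BANDWIDTH_GROWTH_PER_PERIOD, years)
    return compute / bandwidth


for years in (2, 10, 20):
    print(f"after {years:>2} years: gap = {compute_to_bandwidth_gap(years):.1f}x")
```

Under these assumptions the gap is just under 2x after two years, but it compounds to roughly 23x after a decade and more than 500x after two decades.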

d-Matrix 3DIMC technology promises to help close the gap.

This trend matters even more today because inference — not training — is quickly becoming the dominant AI workload. CoreWeave recently stated that 50% of its workloads are now inference, and analysts project that within the next two to three years inference will make up more than 85% of all enterprise AI workloads.

Every query, chatbot response, and recommendation is an inference task repeated at massive scale, and each one is constrained by memory throughput. Today’s AI applications demand better memory.
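A rough way to see why memory throughput sets the ceiling: when a model generates text one token at a time for a single request, it typically has to read all of its weights from memory for every token. The sketch below turns that into a simple upper bound. It is a back-of-the-envelope estimate, not a benchmark. The model size and bandwidth figures are hypothetical, and the bound ignores batching, KV-cache traffic, and compute time.

```python
# Back-of-the-envelope upper bound on single-request token generation speed.
# Assumption: every generated token requires reading all model weights once,
# so speed is capped by memory bandwidth divided by the weight footprint.


def max_decode_tokens_per_second(weight_bytes: float,
                                 bandwidth_bytes_per_s: float) -> float:
    """Memory-bound ceiling on tokens per second for one request."""
    if weight_bytes <= 0 or bandwidth_bytes_per_s <= 0:
        raise ValueError("weight_bytes and bandwidth_bytes_per_s must be positive")
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical example values, not measurements of any product:
WEIGHT_BYTES = 70e9          # e.g. 70 billion parameters at 1 byte each
BANDWIDTH_BYTES_PER_S = 3e12  # e.g. 3 TB/s of memory bandwidth

baseline = max_decode_tokens_per_second(WEIGHT_BYTES, BANDWIDTH_BYTES_PER_S)
doubled = max_decode_tokens_per_second(WEIGHT_BYTES, 2 * BANDWIDTH_BYTES_PER_S)

print(f"ceiling at baseline bandwidth: {baseline:.1f} tokens/s")
print(f"ceiling at 2x bandwidth:       {doubled:.1f} tokens/s")
```

In this simplified model the ceiling scales linearly with memory bandwidth, while adding compute does nothing to raise it.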

Taking the Lead on Memory-Forward Solutions

d-Matrix was founded to tackle problems like this head-on. We’re not repurposing architectures built for training — we are designing inference-first solutions from the ground up.

Corsair’s first customers, which include some of the world’s largest hyperscalers and neoclouds, are seeing how our memory-forward design can dramatically improve throughput, energy efficiency, and token generation speed compared to GPU-based approaches.

Our chiplet-based design enables not just greater memory throughput, but also incredible flexibility, making it possible to adopt next-generation memory technologies faster and more efficiently than monolithic architectures.

Building on this foundation, our next-generation architecture, Raptor, will incorporate 3DIMC into its design, benefiting from what we and our customers learn from testing on Pavehawk. By stacking memory vertically and integrating it tightly with compute chiplets, Raptor promises to break through the memory wall, unlocking entirely new levels of performance and a lower total cost of ownership (TCO).

We are targeting 10x higher memory bandwidth and 10x better energy efficiency when running AI inference workloads with 3DIMC compared with HBM4-based solutions. These are not incremental gains. They are step-function improvements that redefine what’s possible for inference at scale.

The Path Forward

As stated at the beginning of this article, AI will simply not be able to scale if costs continue their current trajectory. The industry is just beginning to confront this reality, but the good news is d-Matrix has been preparing for it all along.

By putting memory requirements at the center of our design — from Corsair to Raptor and beyond — we are ensuring that inference is faster, more affordable, and sustainable at scale.

The memory wall has been decades in the making. With our commitment to memory-centric design, d-Matrix is blazing the trail beyond it and building a sustainable path for the future of AI.