Finding the middle ground: how smaller models will unlock the next wave of AI

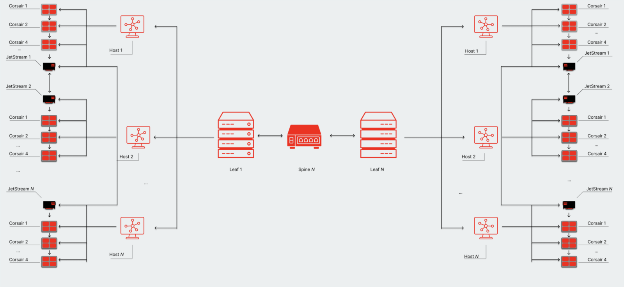

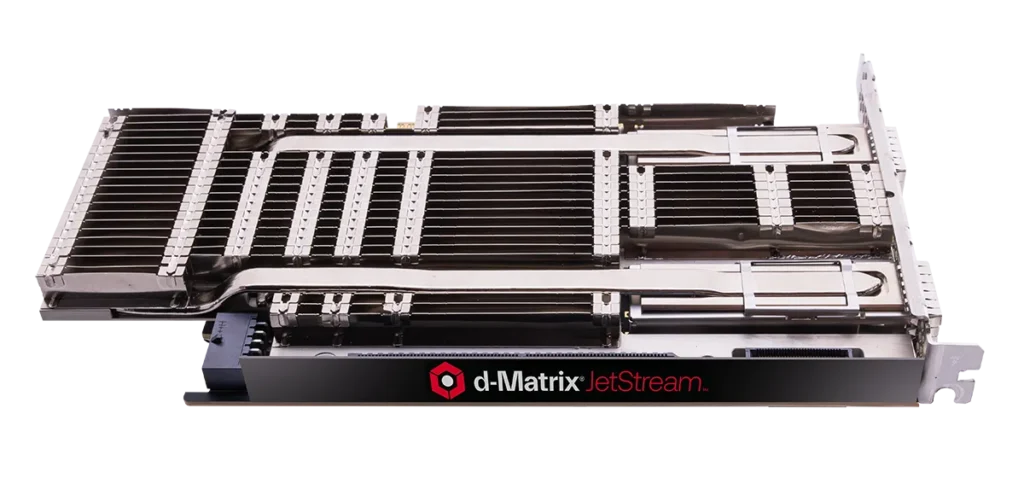

Delivering high-quality AI-powered applications has historically relied on massive models. That approach comes with significant scaling limitations: deploying models with more than 100B parameters while maximizing token generation doesn't scale without sacrificing latency…