d-Matrix’s advantage over classic GPU architecture is extremely low-latency, ultra-efficient small-batch inference. Getting there means attacking three bottlenecks (compute, memory, and I/O) with a wide range of techniques, and we must excel at all three. Each bottleneck currently has its own technology: Corsair, 3DIMC, and now JetStream.

There’s no one magic sledgehammer to send latency to zero. Instead, we worked to shave every possible microsecond off each inference. To accomplish that while staying within the bounds of Ethernet-based standards, it was clear we needed a new transparent NIC.

Our approach to inference using disaggregated compute represented an opportunity to revisit the entire hardware and software stack, including the NIC. After Corsair, the NIC was the next lowest-hanging fruit.

Starting with disaggregated compute

We see three barriers to fast, efficient, high-performance AI inference: compute, memory, and I/O.

Maximizing utilization while maintaining low latency is the key trade-off here, which we approached by targeting small batch sizes. We do this through disaggregated direct in-memory compute.

We have a system where each Corsair card has 2GB of high-performance SRAM, enabling extremely fast and efficient tensor parallelism. Our disaggregated compute approach spreads the weights and activations across each compute engine. The combined SRAM across a Corsair-driven system determines the model size it can hold, along with governing batch size and context window considerations.

Each compute engine is given a pre-defined piece of the inference. For example, one card might be responsible for components of a set of layers of an attention head.
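To make the idea of a pre-defined piece concrete, here is a minimal sketch that statically assigns contiguous layer ranges to cards. It is a simplification under stated assumptions: the `Assignment` type and `partition_layers` function are hypothetical names, and the sketch splits whole layers only, whereas the scheme described above also divides work within layers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assignment:
    card: int         # hypothetical card index
    first_layer: int  # inclusive
    last_layer: int   # inclusive


def partition_layers(num_layers: int, num_cards: int) -> list[Assignment]:
    """Split layers into contiguous, near-equal ranges, one range per card."""
    if num_cards <= 0 or num_layers < num_cards:
        raise ValueError("need at least one layer per card")
    base, extra = divmod(num_layers, num_cards)
    plan, start = [], 0
    for card in range(num_cards):
        # The first `extra` cards take one additional layer each.
        size = base + (1 if card < extra else 0)
        plan.append(Assignment(card, start, start + size - 1))
        start += size
    return plan


if __name__ == "__main__":
    for assignment in partition_layers(num_layers=10, num_cards=4):
        print(assignment)
```

Because the plan is fixed before inference starts, every card knows in advance what it will compute and which neighbor its input comes from.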

This approach gives us high throughput and low latency. Corsair enables significantly faster reasoning models and agentic flows across multiple modalities while maxing utilization at small batch sizes. At the appliance level it works well with smaller models, but larger models are a rack-level problem.

As such, the number of Corsair cards needs to scale out to meet the total memory capacity that larger models require. There are a lot of ways to attack that, but the next microseconds naturally came from revisiting the I/O fabrics enabling Corsair.
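A rough way to see why larger models become a rack-level problem is to divide the memory a model needs by the 2GB of SRAM on each card. The sketch below is back-of-the-envelope only: `cards_needed` is a hypothetical helper, the model size and bytes per parameter are illustrative inputs, 2GB is read as binary gigabytes, and `overhead_fraction` is an assumed stand-in for activations and context state rather than a measured figure.

```python
import math

# 2GB of SRAM per Corsair card, from the text (binary gigabytes assumed).
SRAM_PER_CARD_BYTES = 2 * 1024**3


def cards_needed(num_params: int, bytes_per_param: float,
                 overhead_fraction: float = 0.0) -> int:
    """Lower bound on cards needed to hold the weights plus assumed overhead."""
    weight_bytes = num_params * bytes_per_param
    total_bytes = weight_bytes * (1 + overhead_fraction)
    return math.ceil(total_bytes / SRAM_PER_CARD_BYTES)


if __name__ == "__main__":
    # Hypothetical 8-billion-parameter model stored at one byte per parameter.
    print(cards_needed(8_000_000_000, 1.0))
    # Same model with an assumed 25% allowance for activations and context.
    print(cards_needed(8_000_000_000, 1.0, overhead_fraction=0.25))
```

The count grows linearly with model size, which is why the fabric connecting the cards becomes the next thing to optimize.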

How JetStream works

Corsair nodes support accelerator-to-accelerator communication across the same PCIe plane. This significantly improves latency: a Corsair accelerator can run ahead to its receive instruction and wait there for the data, while the prior accelerator already has a mechanism for sending that data as soon as it’s ready.
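The run-ahead pattern can be illustrated in software with two threads and a queue. This is an analogy, not Corsair’s hardware mechanism: the `receiver`, `sender`, and `run` names are hypothetical, a Python queue stands in for the link between accelerators, and the sleep stands in for compute.

```python
import queue
import threading
import time


def receiver(link: queue.Queue, armed: threading.Event, log: list) -> None:
    log.append("receiver: waiting at recv")
    armed.set()                # signal that the receive is already posted
    data = link.get()          # blocks until the sender pushes data
    log.append(f"receiver: got {data}, starting compute")


def sender(link: queue.Queue, log: list) -> None:
    time.sleep(0.05)           # stand-in for the sender's own compute
    log.append("sender: result ready, sending")
    link.put("activations")    # pushed the moment the result exists


def run() -> list:
    link: queue.Queue = queue.Queue(maxsize=1)
    armed = threading.Event()
    log: list = []
    rx = threading.Thread(target=receiver, args=(link, armed, log))
    tx = threading.Thread(target=sender, args=(link, log))
    rx.start()
    armed.wait()               # the receiver is parked before the sender starts
    tx.start()
    tx.join()
    rx.join()
    return log


if __name__ == "__main__":
    for line in run():
        print(line)
```

The point of the pattern is that nothing sits between "result ready" and "next stage starts" except the transfer itself, because the receiving side did its setup earlier.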

However, in classic architecture, the host dictates inter-node behavior, including the NIC-to-NIC data transfer to the next node. The host instructs the NIC to write data to a buffer and send it to the NIC of the next node, which must in turn receive instructions to wait for that data. This requires multiple interactions with the host before the data is available to the next accelerator.

JetStream extends the accelerator-to-accelerator communication from intra-node to inter-node. Rather than requiring the host to issue instructions to govern NIC behavior, JetStream can freely transmit data to the next node, with the transfer behaving the same as if it were intra-node.

In other words, JetStream extends the decoupled execution of Corsair to multiple nodes by enabling accelerator-to-accelerator communication and synchronization across them.

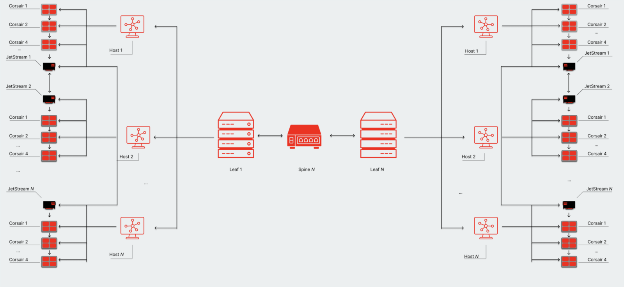

At inference time, each host sends instructions to each accelerator. For example, the first accelerator is given instructions to execute its layers using the prompt and its weight shard, while the rest are given instructions to wait for data from the first accelerator. Each JetStream connects four Corsair cards to a leaf switch using Ethernet.
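The four-cards-per-JetStream ratio gives a simple way to estimate how many JetStream NICs a deployment needs. The sketch below is illustrative only: `group_cards` is a hypothetical helper, card indices are arbitrary, and allowing a final, partially filled group is an assumption rather than a documented configuration.

```python
# Four Corsair cards per JetStream, from the text.
CARDS_PER_JETSTREAM = 4


def group_cards(num_cards: int) -> list[list[int]]:
    """Group card indices into JetStream-sized groups, in order."""
    if num_cards < 0:
        raise ValueError("num_cards must be non-negative")
    return [
        list(range(start, min(start + CARDS_PER_JETSTREAM, num_cards)))
        for start in range(0, num_cards, CARDS_PER_JETSTREAM)
    ]


if __name__ == "__main__":
    groups = group_cards(10)
    print(len(groups), "JetStream NICs for 10 cards")
    for index, cards in enumerate(groups):
        print(f"jetstream-{index}: cards {cards}")
```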

In a standard RoCE v2 system, the current industry gold standard, the data from that completion returns to a host controller, which passes it to the second controller. Multiple passes are involved in this structure:

- Each accelerator has send and receive calls, but several instructions exist prior to the receive call and after the send call.

- In standard execution, instructions aren’t issued until the receive instruction is complete. The accelerator also must complete the instructions after the send occurs.

- The host governs the instructions ordering the data to transfer to the NIC on the PCIe plane, after which the NIC can request data from the accelerator to move to the buffer.

- When moving to the next server, the next NIC must receive instructions to receive the data.

- The host has to send instructions to the NIC to copy data from the accelerator.

- The NIC has to send a request to the accelerator to read the buffer.

And so on and so forth! All these pre- and post-send/receive executions add up over time, especially in a rack-level deployment. But the work in between, where the actual compute happens, like our GEMMs, doesn’t necessarily need all that mess on the edges. It just needs a stream of data and a “go” signal.
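One way to see the difference is to write each path down as a list of steps and count how many involve the host. The step lists below are a simplified paraphrase of the description above, not a protocol trace, and the `Step` type and `host_interactions` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    description: str
    involves_host: bool


# Simplified paraphrase of a host-mediated transfer between two nodes.
HOST_MEDIATED = [
    Step("host instructs the sending NIC to copy data from the accelerator", True),
    Step("sending NIC requests the data from the accelerator buffer", False),
    Step("sending NIC transmits to the next node's NIC", False),
    Step("host instructs the receiving NIC to receive the data", True),
    Step("receiving NIC delivers the data to the next accelerator", False),
]

# Simplified paraphrase of a direct accelerator-to-accelerator transfer.
DIRECT = [
    Step("accelerator sends the data together with a 'go' signal", False),
    Step("next accelerator, already waiting, receives and starts", False),
]


def host_interactions(path: list[Step]) -> int:
    """Count the steps in a path that require the host to act."""
    return sum(step.involves_host for step in path)


if __name__ == "__main__":
    print("host-mediated:", len(HOST_MEDIATED), "steps,",
          host_interactions(HOST_MEDIATED), "involving the host")
    print("direct:", len(DIRECT), "steps,",
          host_interactions(DIRECT), "involving the host")
```

Every host interaction removed from the critical path is a round trip that no longer has to finish before the next stage can begin.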

JetStream enables accelerators to issue those “go” signals along with the data necessary for the next accelerator. This is done by taking advantage of JetStream-to-JetStream connectivity through a dedicated switch.

Rack-scale and multi-rack solutions built on standard RoCE v2 systems accrue a large penalty that scales linearly, because both the control plane and the data plane require a series of handshakes.

With JetStream, each server contains its own direct path to a leaf switch that can transfer data immediately to the next server. By the time the previous pipeline stage completes, the next stage has already received its instructions and is waiting for data before execution.

This removes multiple host-accelerator hops in the data transfer process, enabling intra-pipeline data transfers that accrue a latency penalty of less than 2µs. Each rack-level leaf switch is connected to spine switches across multiple racks in an all-to-all configuration. JetStream enables each server connected to a dedicated rack leaf switch to behave as if it is on a single plane with all other servers.
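The leaf and spine arrangement can be sketched as a function that returns the switches a transfer crosses. This is a generic leaf-spine model, not a description of the actual fabric: `switch_path` is a hypothetical helper, it assumes one leaf switch per rack, it assumes same-rack traffic stays on that leaf, and the spine name is a placeholder since any spine reachable from both leaves would do.

```python
def switch_path(src_rack: int, dst_rack: int, spine: str = "spine-0") -> list[str]:
    """Return the switches crossed between servers in two racks."""
    if src_rack == dst_rack:
        # Same rack: the shared leaf switch connects the two servers directly.
        return [f"leaf-{src_rack}"]
    # Different racks: leaf up to a spine, then down to the destination leaf.
    return [f"leaf-{src_rack}", spine, f"leaf-{dst_rack}"]


if __name__ == "__main__":
    print("same rack:", " -> ".join(switch_path(0, 0)))
    print("cross rack:", " -> ".join(switch_path(0, 2)))
```

Because every leaf connects to the spines, the cross-rack path has the same shape regardless of which two racks are involved, which is what lets servers behave as if they share a single plane.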

Why I/O

JetStream exists in the first place because it was the next obvious spot where we could eliminate a significant amount of latency. Work continues on Corsair and our stacked 3DIMC memory solution, but it’s hard to ignore the opportunity to shave off more than 2µs per pipeline transfer.

JetStream also continues to offer optionality for customers. We work with partners to create a fully qualified rack-level AI inference solution in SquadRack, which you can purchase through partners like Supermicro.

Building a high-performance I/O system also prepares us for changes in the AI model ecosystem. The SquadRack blueprint can support expert parallelism for increasingly popular MoE models, and it can scale out to accommodate the size of those models in the first place.

Each bottleneck will also continue to present new challenges beyond the ones it presents today. JetStream is our “first shot” at the I/O bottleneck, enabling rack-scale inference solutions built on Corsair.