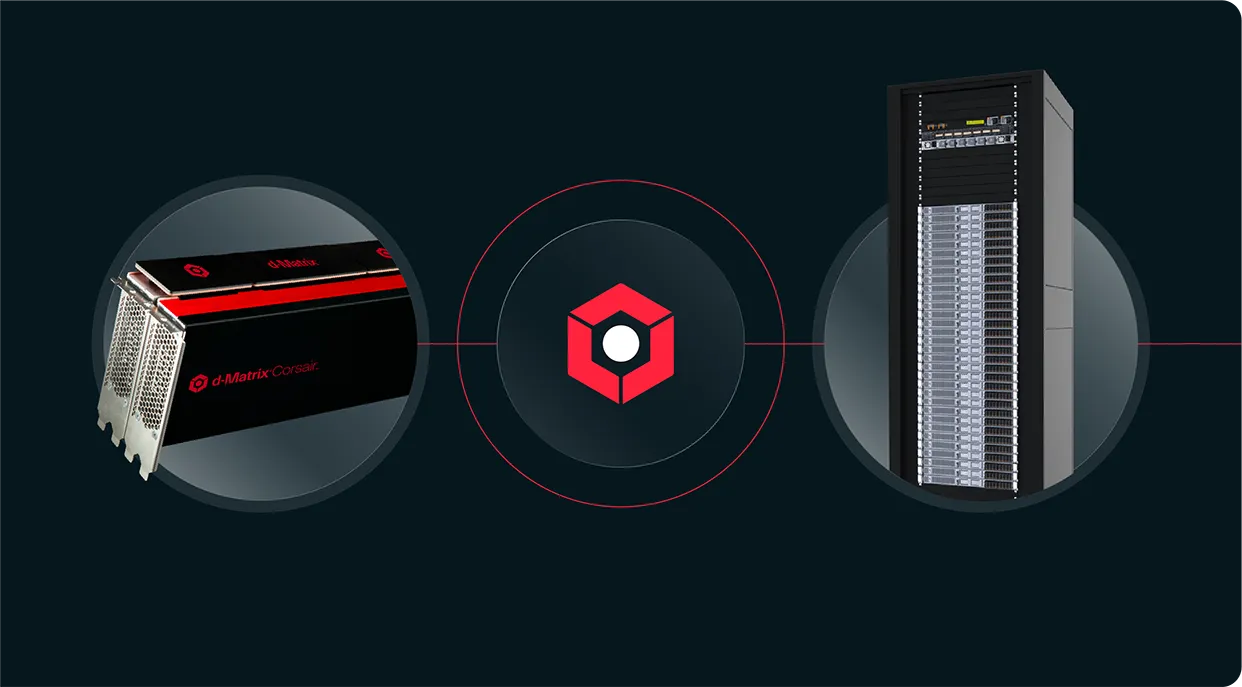

Meet Corsair™,

the world’s most efficient AI inference platform for datacenters

60,000 tokens/sec at 1 ms/token latency for Llama3 8B in a single server

30,000 tokens/sec at 2 ms/token latency for Llama3 70B in a single rack
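The two headline numbers pair an aggregate throughput with a per-token latency. As a rough back-of-the-envelope illustration, the sketch below divides the aggregate throughput by the per-stream decode rate implied by the per-token latency. It assumes that every concurrent stream decodes at exactly the quoted latency and that the aggregate figure is the sum across those streams. The actual batch size is not stated here, and `implied_concurrent_streams` is a hypothetical helper, not a d-Matrix API.

```python
def implied_concurrent_streams(aggregate_tokens_per_sec: float, ms_per_token: float) -> float:
    """Estimate how many concurrent generation streams a throughput/latency pair implies.

    Simplified model (assumption): each stream emits one token every
    `ms_per_token` milliseconds, and the aggregate tokens/sec figure is the
    sum over all streams running in parallel.
    """
    # A stream that emits one token per `ms_per_token` ms produces this many tokens per second.
    per_stream_tokens_per_sec = 1000.0 / ms_per_token
    # Total throughput divided by the per-stream rate gives the implied number of streams.
    return aggregate_tokens_per_sec / per_stream_tokens_per_sec


# Headline figures quoted on this page.
for model, tokens_per_sec, ms in [("Llama3 8B", 60_000, 1.0), ("Llama3 70B", 30_000, 2.0)]:
    print(f"{model}: ~{implied_concurrent_streams(tokens_per_sec, ms):.0f} concurrent streams")
```

Under this simplified model, both configurations work out to about 60 concurrent streams: 1 ms/token corresponds to 1,000 tokens/sec per stream, and 2 ms/token corresponds to 500 tokens/sec per stream.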

Redefining Performance and Efficiency for AI Inference at Scale

Blazing Fast

x

interactive-speed

Commercially Viable

x

cost-performance

Sustainable

x

energy-efficiency

Performance projections for Llama-70B with 4K context length and 8-bit inference, compared with an H100 GPU. Results may vary.

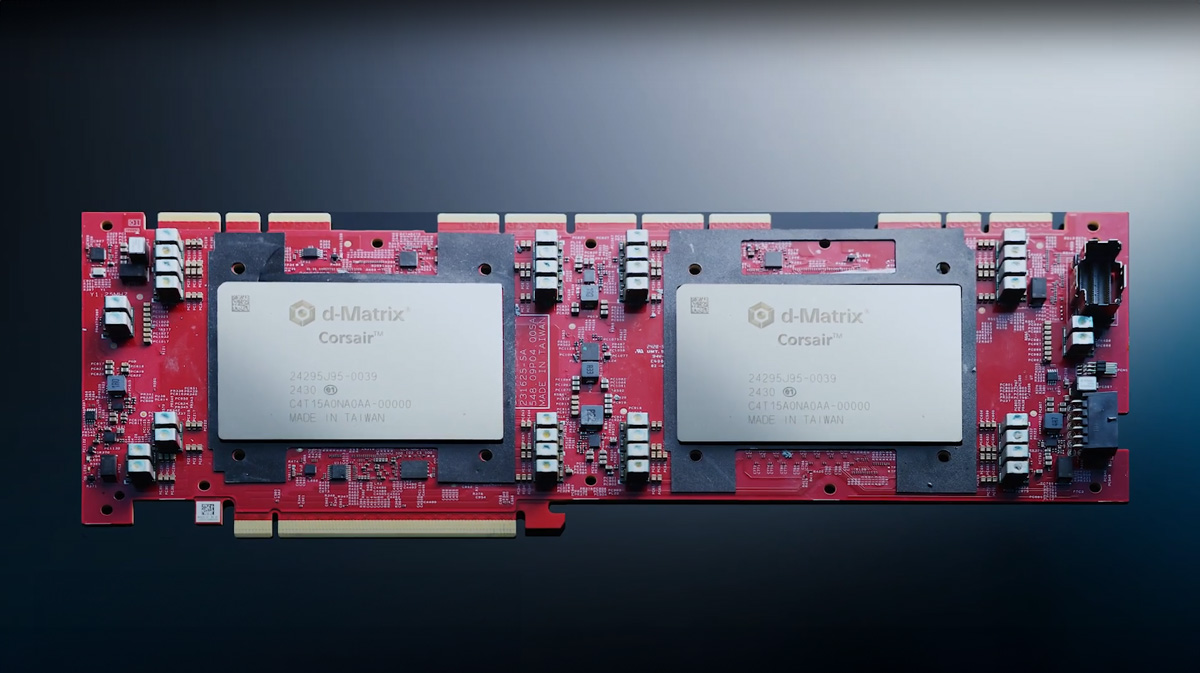

Built without compromise

Don’t limit what AI can truly achieve and who can benefit from it. We’ve built Corsair from the ground up with a first-principles approach, delivering GenAI without compromising on speed, efficiency, sustainability, or usability.

Performant AI

d-Matrix delivers ultra-low latency and high batched throughput, making enterprise GenAI workloads efficient.

Sustainable AI

AI is on an unsustainable trajectory, with rising energy consumption and compute costs. d-Matrix lets you do more with less.

Scalable AI

Our purpose-built solution scales across models and is enabling enterprises and datacenters of all sizes to adopt GenAI quickly and easily.