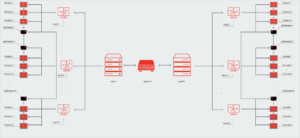

AI infrastructure spending has surged: IDC reports a 97% increase to $47.4 billion in the first half of 2024. Servers accounted for 95% of that spending, with OEMs and ODMs as the main beneficiaries, while the remaining 5% went to storage. Cloud-based AI servers, including hosted iron, made up 72% of AI server revenues, or $32.4 billion in the first half of 2024, leaving $12.6 billion for on-premises systems. IDC also estimates that accelerated servers, which incorporate GPUs and other XPUs, drove 70% of AI server sales in 1H 2024, and it expects that share to rise to 75% by 2028. For more details, refer to the IDC report.

As this trend continues, enterprises and data centers are looking for ways to integrate GenAI into their operations that make model inference faster and more cost-effective while reducing power consumption. In response to this demand, d-Matrix is positioning itself as a provider that can meet these needs.

IDC InfoBrief, sponsored by d-Matrix: *Inference at Scale for Generative AI Models Can Be Faster, Cost Efficient, and Power Efficient*, IDC #US52699124