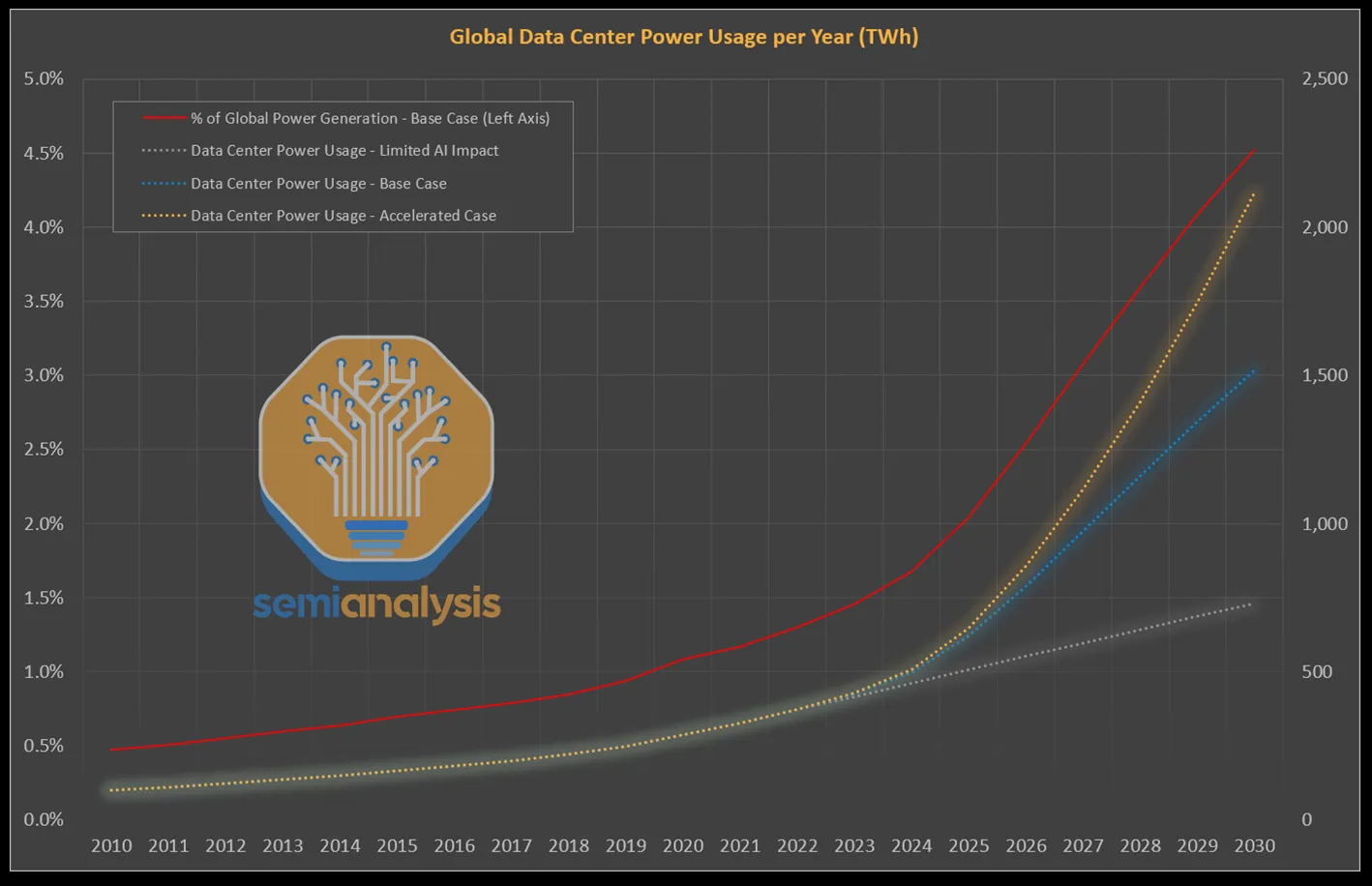

Since the launch of ChatGPT in November 2022—just two and a half years ago—Generative AI (GenAI) has seen unprecedented growth. This has led to a dramatic increase in demand for datacenter infrastructure. Meta, Google, and Microsoft, for example, are collectively expected to spend over $200B in 2025 alone on datacenter buildout, while OpenAI’s Stargate project involves investing $500B over four years in data centers.

The growing need to train better Large Language Models (LLMs) and to serve them at scale to billions of users through AI inference is driving all that demand, with companies like Meta running clusters of hundreds of thousands of GPUs. New applications like Cursor and ChatGPT, a new pool of hundreds of millions of users for those apps, and increased engagement from each of those users have upended the classic way of thinking about datacenters.

This post outlines the challenges datacenters face on all fronts: the dramatic spike in cost, power and performance requirements, and scalability challenges driven by this sharp increase in Generative AI demand.

If you are new to AI inference, check out the first post in this series: What is AI Inference and why it matters in the age of Generative AI.

Token economics

Meeting the needs of GenAI requires massive capital investment in large-scale AI infrastructure. While part of this capacity supports model training, a large portion is needed to serve AI inference. Despite increasing capacity, OpenAI still rate-limits its models, especially newly released ones, for both API customers and ChatGPT end users because compute availability is constrained. Operational expenses have also increased significantly. GenAI workloads are far more compute-intensive than traditional cloud computing, driving up the electricity and cooling costs of operating power-hungry GPUs. These upfront and operational expenses raise the total cost of serving the atomic unit of GenAI output: tokens. Passing these costs on to users early in a product’s life traditionally slows adoption, since people want to try something before they really invest in it, but subsidizing usage to encourage growth can strain margins and profitability for the inference operators.
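To make the cost structure concrete, here is a minimal sketch of how capital and operating expenses might roll up into a cost per million tokens. The function name, the cost model (straight-line amortization plus electricity only), and every number in the example are illustrative assumptions, not figures from any operator.

```python
HOURS_PER_YEAR = 24 * 365


def cost_per_million_tokens(
    capex_usd: float,
    amortization_years: float,
    power_kw: float,
    electricity_usd_per_kwh: float,
    tokens_per_second: float,
    utilization: float = 1.0,
) -> float:
    """Rough serving cost in USD per one million generated tokens.

    Simplified model: hardware cost is spread evenly over its lifetime,
    and electricity is the only operating expense considered.
    """
    capex_per_hour = capex_usd / (amortization_years * HOURS_PER_YEAR)
    energy_per_hour = power_kw * electricity_usd_per_kwh
    # Utilization scales down the tokens actually served per hour.
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return (capex_per_hour + energy_per_hour) / tokens_per_hour * 1_000_000


# Hypothetical server: all values below are made up for illustration.
example_cost = cost_per_million_tokens(
    capex_usd=300_000,
    amortization_years=4,
    power_kw=10,
    electricity_usd_per_kwh=0.10,
    tokens_per_second=5_000,
    utilization=0.5,
)
print(f"${example_cost:.2f} per million tokens")
```

In this simplified model, the cost per token is inversely proportional to utilization, which is one reason idle capacity is so expensive for inference operators.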

Gigawatt-scale problems

Serving larger models at higher throughput is pushing power density higher across all infrastructure layers, including accelerators, servers, racks, and entire datacenters. Research firm SemiAnalysis indicates that Nvidia’s next-generation GPUs may consume up to 1,800W per GPU, over four times the power of A100 GPUs.

AI racks like those with Nvidia’s GB200 can consume more than 100kW today, more than 5x the standard 20kW required for cloud computing racks, and some roadmaps, like Nvidia’s Rubin Ultra, will soon go beyond half a million watts per rack. AI is reshaping datacenter design to accommodate this shift: facilities are being built physically closer to power generation sources, and the move to liquid cooling is accelerating. (Meta, for example, is working on a cluster called Hyperion that can scale up to 5GW.) These shifts challenge not only datacenter design and supply chains, but also local power grids. Datacenter operators are exploring more energy-efficient compute solutions to alleviate these concerns.
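The scale of these numbers is easier to see with some quick arithmetic. The sketch below uses the rack and site figures quoted above; the function name and the power usage effectiveness (PUE) parameter are assumptions added for illustration, and real facilities reserve power for much more than racks.

```python
def racks_supported(site_power_w: float, rack_power_w: float, pue: float = 1.0) -> int:
    """Number of racks a site can power.

    pue is the ratio of total facility power to IT power; 1.0 means
    no cooling or power-delivery overhead (an idealized assumption).
    """
    it_power_w = site_power_w / pue
    return int(it_power_w // rack_power_w)


AI_RACK_W = 100_000          # ~100kW AI rack, from the text
CLOUD_RACK_W = 20_000        # ~20kW cloud rack, from the text
SITE_W = 5_000_000_000       # 5GW site, from the text

density_ratio = AI_RACK_W / CLOUD_RACK_W
ai_racks = racks_supported(SITE_W, AI_RACK_W)
ai_racks_with_overhead = racks_supported(SITE_W, AI_RACK_W, pue=1.25)  # hypothetical PUE

print(density_ratio, ai_racks, ai_racks_with_overhead)
```

Under these assumptions, a 5GW site corresponds to 50,000 racks at 100kW each before any cooling overhead is counted, and fewer once a PUE above 1.0 is applied.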

Reliability and user experience

Generative AI workloads are inherently memory-bound and do not run efficiently on GPUs. This results in slower token generation and a poorer user experience during AI inference on classic GPU architectures relative to inference-optimized architectures. For example, image generation in ChatGPT using GPT-4o can in some cases take over a minute. To mitigate these issues as demand increases, datacenters will need to expand capacity and deploy inference-optimized accelerators to ensure responsiveness and service reliability.
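One common back-of-envelope way to see why token generation tends to be memory-bound is to assume that each decoding step must stream every model weight from memory once. The sketch below computes the resulting upper bound on tokens per second. The function name, the model size, and the bandwidth figure are illustrative assumptions, and the estimate ignores KV-cache traffic and compute limits.

```python
def decode_tokens_per_second_bound(
    num_params: float,
    bytes_per_param: float,
    mem_bandwidth_bytes_per_s: float,
    batch_size: int = 1,
) -> float:
    """Upper bound on decode throughput when weight reads dominate.

    Assumes each decoding step reads all weights from memory once and
    produces one token per sequence in the batch.
    """
    weight_bytes = num_params * bytes_per_param
    steps_per_second = mem_bandwidth_bytes_per_s / weight_bytes
    return steps_per_second * batch_size


# Hypothetical setup: 70B parameters at 2 bytes each, 2 TB/s of memory bandwidth.
single_stream = decode_tokens_per_second_bound(70e9, 2, 2e12)
batched = decode_tokens_per_second_bound(70e9, 2, 2e12, batch_size=8)

print(f"{single_stream:.1f} tokens/s for one stream, {batched:.1f} tokens/s at batch size 8")
```

In this model, throughput for a single stream is capped by memory bandwidth regardless of how much compute the chip offers, which is why batching and higher-bandwidth memory systems matter so much for inference.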

Scaling complexity

Supporting large-scale model training and inference requires massive clusters of AI accelerators. Some training clusters, like the ones mentioned above, have already surpassed 100,000 GPUs. Frontier model providers are now building towards 300,000+ GPUs spread across multiple campuses, per SemiAnalysis.

While inference can usually run on a single server or rack, serving hundreds of millions of users requires large inference-optimized clusters. Orchestrating and managing such a cluster while ensuring high hardware utilization, low latency, and system reliability is an incredibly complex problem – it is as much a hardware challenge as a software one. High-speed interconnectivity between accelerators and sophisticated scheduling and load-balancing software play a critical role. Large enterprises and datacenter operators are now investing heavily in purpose-built orchestration stacks to manage and optimize these clusters.

Rethinking the full stack

The goal of AI datacenters is to deliver performant, reliable GenAI experiences while keeping total cost of ownership in check. This requires maximizing utilization of compute and energy resources to get the most out of the infrastructure. At the datacenter level, efficient power delivery and cooling designs are critical. At the compute platform level, every layer of the inference stack must be optimized – from accelerator architecture to compilers, from runtime software to orchestration systems, and even the AI models themselves. Full-stack hardware-software codesign is essential, and it starts with first-principles thinking. We will dive deeper into this in our next post.